Introduction to Asynchronous Processing

Client-server communication is one of the core operations in software development today. A system (the client) requests work from another system (the server) by exchanging messages with it, and the server reports the outcome of that work back. Managing this communication can easily grow in complexity on the network layer once you have to control the rate at which messages are sent, the number of requests a service can handle at a given time, and how quickly the client needs a response.

In this post, we look at two ways of managing communication between networked applications, synchronous and asynchronous processing, and at how to solve some of the problems that come with scaling the process.

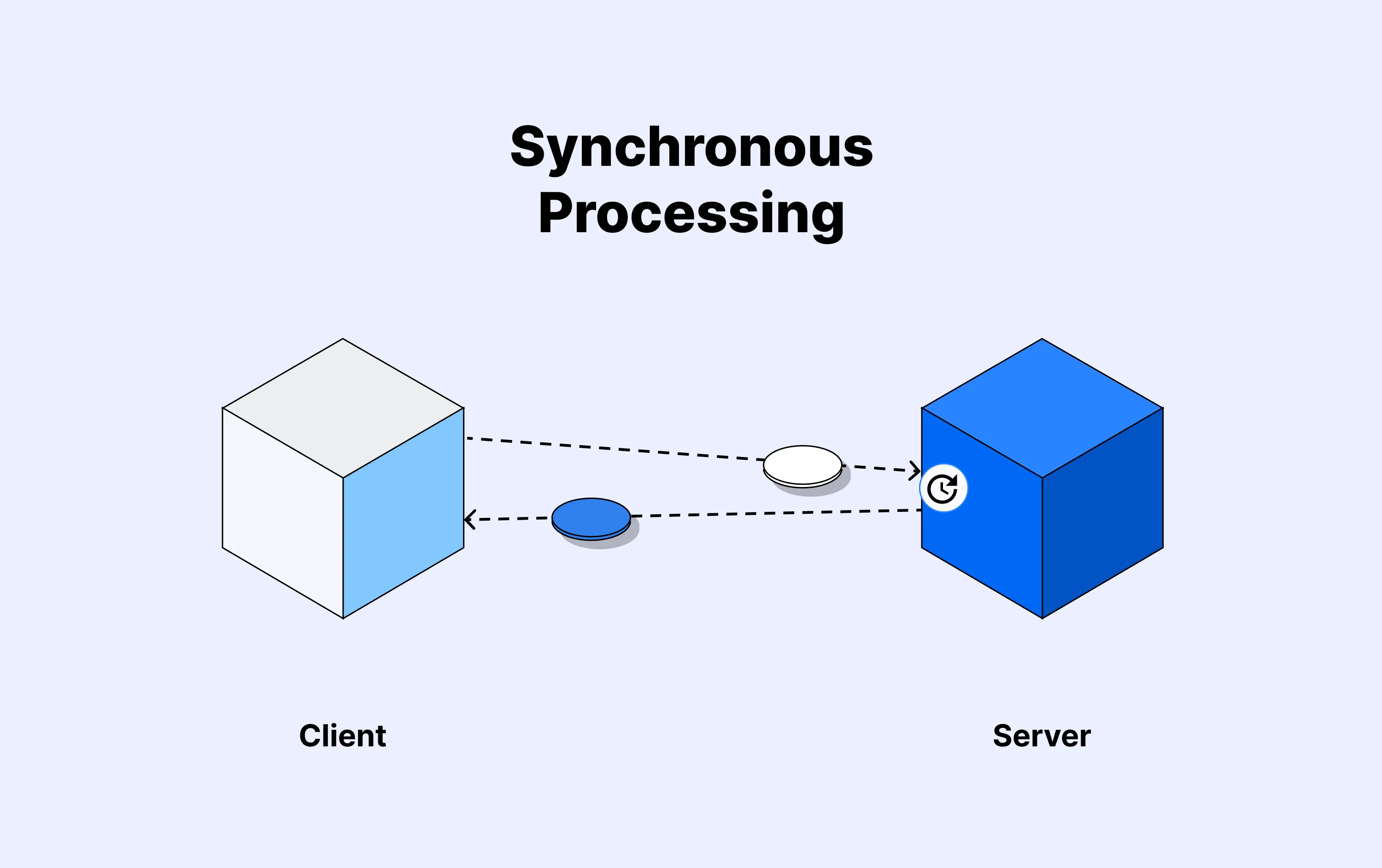

What is synchronous processing?

Synchronous processing is the traditional way of processing in client-server communication. A client sends a request to a server and waits for the server to complete the job and send a response before the client can continue doing any other work. This process is often referred to as blocking (i.e. the client is blocked from doing any other work until a response is received).

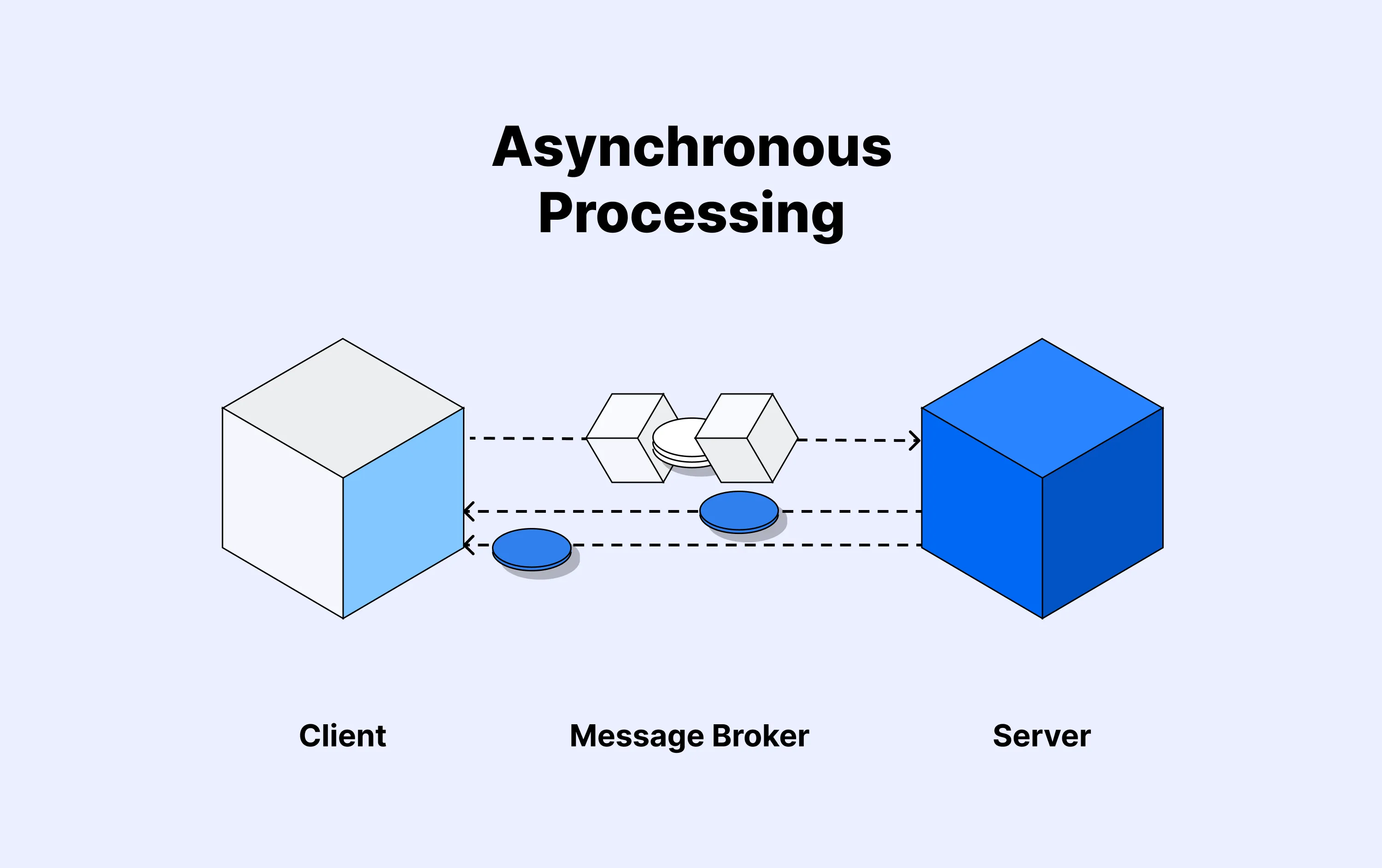
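
As a rough illustration of the blocking behavior described above, here is a minimal Python sketch. The `handle_request` function is a hypothetical stand-in for a server call (it only sleeps to simulate work), and the 50 ms delay is an arbitrary assumption.

```python
import time


def handle_request(job: str) -> str:
    """Hypothetical server call: sleeps to simulate slow work."""
    time.sleep(0.05)  # assumed processing time, for illustration only
    return f"done: {job}"


def run_sync(jobs: list[str]) -> list[str]:
    """Send each job and wait for its response before sending the next."""
    results = []
    for job in jobs:
        # The client is blocked on this line until the server responds.
        results.append(handle_request(job))
    return results
```

With two jobs, the client spends roughly twice the processing time waiting, because the second request cannot start until the first response arrives.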

What is asynchronous processing?

Asynchronous processing is the opposite of synchronous processing, as the client does not have to wait for a response after a request is made, and can continue other forms of processing. This process is referred to as non-blocking because the execution thread of the client is not blocked after the request is made. This allows systems to scale better as more work can be done in a given amount of time.
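
The non-blocking flow can be sketched with Python's `asyncio`. This is only one way to illustrate the idea inside a single process; `handle_request` is again a hypothetical stand-in for a remote server, and the log messages are made up for the example.

```python
import asyncio


async def handle_request(job: str) -> str:
    """Hypothetical server call: awaits a timer to simulate slow work."""
    await asyncio.sleep(0.05)
    return f"done: {job}"


async def client() -> list[str]:
    log = []
    # Start the request without waiting for its result.
    task = asyncio.create_task(handle_request("report"))
    log.append("request sent")
    # The client is not blocked, so it can carry on with other work.
    log.append("client does other work")
    # Collect the response only when it is actually needed.
    log.append(await task)
    return log
```

Running `asyncio.run(client())` records the client's other work before the response arrives, which is the ordering a blocking call would not allow.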

Differences between synchronous and asynchronous processing

Now that we understand what synchronous and asynchronous processing are, let's compare the two to see how asynchronous processing offers more benefits than its counterpart:

- Synchronous requests block the execution thread of the client, causing it to wait for as long as each request takes before it can perform another action. Asynchronous requests, on the other hand, do not block and allow more work to be done in a given amount of time.

- The blocking nature of synchronous requests causes the client to hold on to resources while doing no useful work, as the execution thread is locked during the waiting period. Asynchronous requests immediately free up the execution thread to perform other functions without having to wait for a response.

- Because there is no way to determine how long a request will take, it is difficult to build responsive applications with synchronous processing. The more blocking operations you perform, the slower your system becomes. With asynchronous processing, the client gets a quick acknowledgement and stays responsive while the request is being processed.

- Failure tolerance of asynchronous processing is higher than that of synchronous processing, as it is easy to build a retry system when a request fails and the client is not held back by how many times the request is retried or how long it takes.

- Asynchronous processing lets a client have many requests in flight at the same time, while a synchronous client thread can only issue its requests sequentially, one after another.
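
To make the throughput difference in the list above concrete, the following sketch times the same set of simulated requests issued one after another and then all at once. It assumes an in-process `request` coroutine with a fixed, made-up delay; real network calls will vary. Note that `asyncio` gives concurrency on a single thread rather than true multi-core parallelism.

```python
import asyncio
import time


async def request(i: int, delay: float = 0.05) -> int:
    """Hypothetical request: waits `delay` seconds, then echoes its id."""
    await asyncio.sleep(delay)
    return i


async def sequential(n: int) -> list[int]:
    # Each request starts only after the previous one has finished.
    return [await request(i) for i in range(n)]


async def concurrent(n: int) -> list[int]:
    # All requests are in flight at once; gather preserves input order.
    return list(await asyncio.gather(*(request(i) for i in range(n))))


def timed(coro_fn, n: int) -> tuple[list[int], float]:
    """Run coro_fn(n) to completion and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = asyncio.run(coro_fn(n))
    return result, time.perf_counter() - start
```

Calling `timed(sequential, 4)` and `timed(concurrent, 4)` returns the same results, but the concurrent run should take roughly the time of one request rather than four.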

How to achieve asynchronous processing

The section above showed that asynchronous processing is the way to go when it comes to building scalable and highly responsive applications. However, all the benefits of asynchronous processing come at a cost to the architecture of the system.

Synchronous processing is a straightforward process. You don't need to think too much about it to achieve it - it's the default behavior in client-server communication.

To achieve asynchronous processing, a middle-man needs to be inserted between the client and the processing server. This middle-man serves as a message broker between the client and the server, ingesting the request messages and distributing them to the server based on the load the processing server can handle at any given moment. The client gets a quick response once the request message is ingested, and can continue doing other activities without waiting for the server.
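
The middle-man idea can be sketched with an in-memory queue and a single worker thread. This is a simplified assumption-laden model, not a production broker: the `Broker` class, its method names, and the `max_pending` limit are all hypothetical, and a real message queue would run as a separate service with persistence.

```python
import queue
import threading
import uuid


class Broker:
    """Hypothetical in-memory broker standing in for a real message queue."""

    def __init__(self, handler, max_pending: int = 100):
        self._queue = queue.Queue(maxsize=max_pending)
        self._handler = handler  # the "processing server"
        self.results = {}        # message id -> result or exception
        threading.Thread(target=self._drain, daemon=True).start()

    def submit(self, payload) -> str:
        """Ingest a message and return an id as the quick acknowledgement."""
        message_id = str(uuid.uuid4())
        # put() only blocks when the queue is full, which acts as back-pressure.
        self._queue.put((message_id, payload))
        return message_id

    def _drain(self) -> None:
        # The worker pulls messages at the pace the handler can sustain.
        while True:
            message_id, payload = self._queue.get()
            try:
                self.results[message_id] = self._handler(payload)
            except Exception as exc:  # keep the worker alive on failures
                self.results[message_id] = exc
            finally:
                self._queue.task_done()

    def wait_until_idle(self) -> None:
        """Block until every ingested message has been processed."""
        self._queue.join()
```

For example, `broker = Broker(lambda x: x * 2)` followed by `broker.submit(21)` returns an id right away; the doubled value appears in `broker.results` under that id once the worker has processed the message.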

Because the client no longer waits for the result, asynchronous processing architectures typically offer callback endpoints that are called once the response to a request is ready.
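
A callback can be sketched in-process as a plain function that the processing side invokes when it finishes. In a networked system the callback would usually be an HTTP endpoint; the `process_later` function, the `req-N` ids, and the uppercase "work" below are assumptions made only for illustration.

```python
import asyncio
from typing import Callable

Callback = Callable[[str, str], None]


async def process_later(request_id: str, payload: str, on_done: Callback) -> None:
    """Hypothetical server: does the work, then calls back with the result."""
    await asyncio.sleep(0.01)  # simulated processing time
    on_done(request_id, payload.upper())


async def main() -> list[tuple[str, str]]:
    received: list[tuple[str, str]] = []

    def callback(request_id: str, result: str) -> None:
        # Stand-in for the client's callback endpoint.
        received.append((request_id, result))

    tasks = [
        asyncio.create_task(process_later(f"req-{i}", text, callback))
        for i, text in enumerate(["a", "b"])
    ]
    # The client is free to do other work here; results arrive via callback.
    await asyncio.gather(*tasks)
    return received
```

The request id passed to the callback is what lets the client match each response to the request that produced it.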

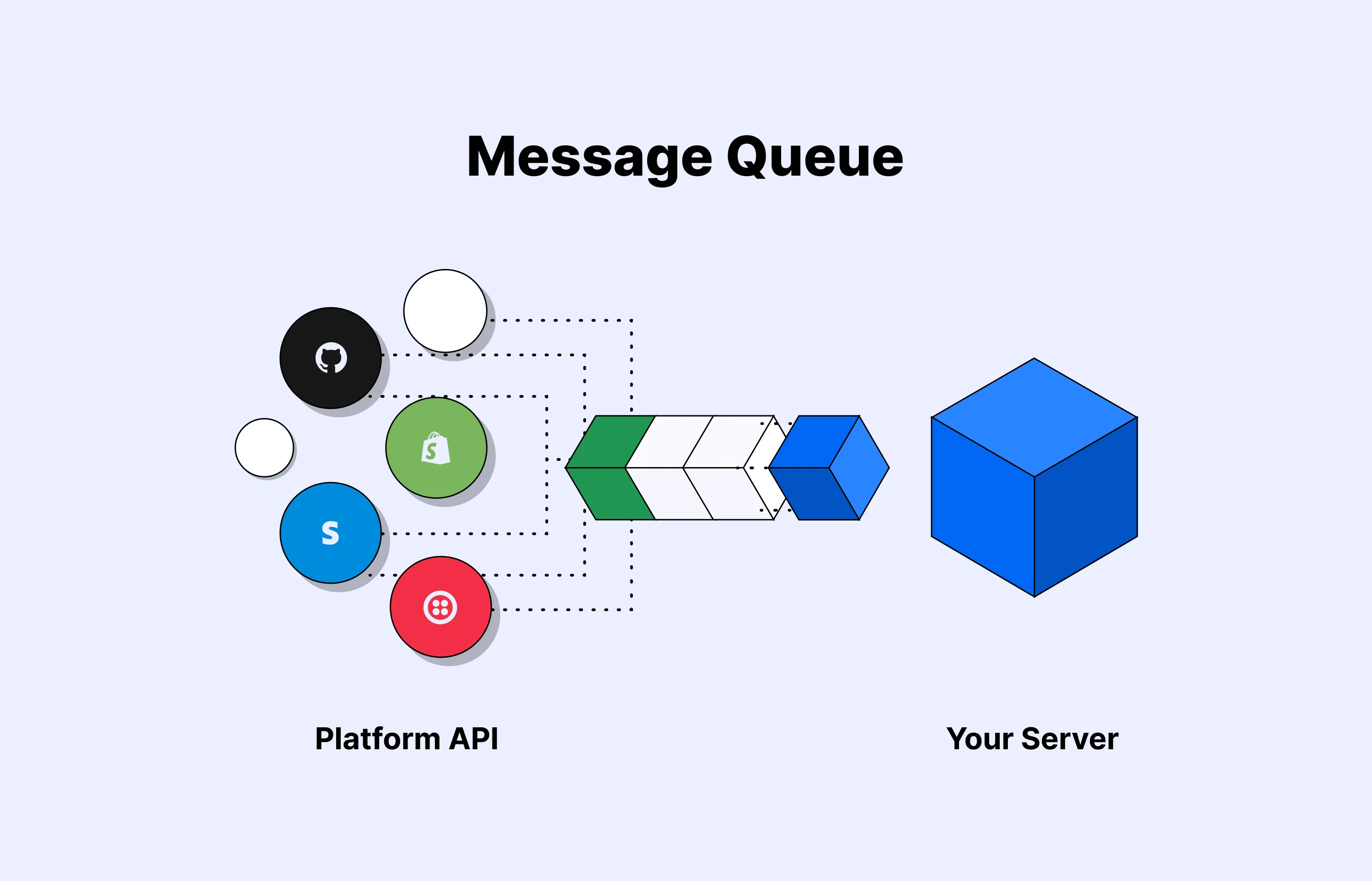

One common middle-man in today's asynchronous processing architectures is the message queue. An alternative is a publish/subscribe system such as an event bus.
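
The main difference between the two is in delivery: a queue typically hands each message to one consumer, while a publish/subscribe system delivers a copy to every subscriber of a topic. The sketch below models the latter with one inbox per subscriber; the `EventBus` class and the topic names are hypothetical and not taken from any particular product.

```python
import queue
from collections import defaultdict
from typing import Any


class EventBus:
    """Hypothetical in-memory publish/subscribe bus."""

    def __init__(self) -> None:
        # topic name -> list of subscriber inboxes
        self._topics: dict[str, list[queue.Queue]] = defaultdict(list)

    def subscribe(self, topic: str) -> queue.Queue:
        """Register a subscriber and return the inbox it should read from."""
        inbox: queue.Queue = queue.Queue()
        self._topics[topic].append(inbox)
        return inbox

    def publish(self, topic: str, event: Any) -> int:
        """Copy the event into every subscriber inbox; return how many got it."""
        inboxes = self._topics[topic]
        for inbox in inboxes:
            inbox.put(event)  # unbounded queue, so the publisher never blocks
        return len(inboxes)
```

Each subscriber drains its own inbox at its own pace, so a slow subscriber does not hold up the publisher or the other subscribers.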

Conclusion

Asynchronous processing introduces a non-blocking request handling process into your applications, giving you a more decoupled system that can be easily scaled for performance. In this post, we looked at the benefits of asynchronous processing and the architecture it requires. A natural next step is learning how to achieve it by using message queues.

To quickly and easily implement asynchronous processing in your applications, you can start using Hookdeck today.