Handling Webhook Spikes for a Shopify Store

Congratulations! Your Shopify e-commerce site is beginning to attract an impressive number of customers. Sales are surging to new heights, and everyone is excited. However, growth brings responsibility: for the first time, you're seeing spikes in web traffic and scalability burdens that present new challenges.

In this article, we will look at one of these capacity problems, the surge in webhook traffic that comes with a growing Shopify store, and explore different solutions that can be put in place quickly and scale to relieve the pressure.

The Flowerify store

Let's say you're a developer at Flowerify Inc., a startup that runs an online store built with Shopify. Flowerify sells flowers and delivers them to destinations chosen by its customers. Because Flowerify also handles delivery, you have set up the store so that when a customer places an order, a webhook from the Shopify store calls an endpoint on a delivery service API running on a server you manage.

The delivery service API takes care of collecting the order information, sending the customer an invoice, and alerting the delivery department about the purchase and items to be delivered.
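To see why each webhook ties up the server, here is a minimal Python sketch of a synchronous handler doing the three jobs described above. The helpers `record_order`, `send_invoice`, and `alert_delivery_team` are hypothetical stand-ins that just sleep to mimic I/O, and the payload fields `id`, `email`, `total_price`, and `line_items` are assumed to be a subset of Shopify's order webhook body.

```python
import json
import time

# Hypothetical stand-ins for the delivery service's three jobs.
# Each one sleeps briefly to mimic a database write or an outbound API call.
def record_order(order: dict) -> None:
    time.sleep(0.01)  # e.g. store the order details

def send_invoice(email: str, total_price: str) -> None:
    time.sleep(0.01)  # e.g. call an email or invoicing provider

def alert_delivery_team(order_id: int, line_items: list) -> None:
    time.sleep(0.01)  # e.g. notify the delivery department

def handle_order_webhook(raw_body: bytes) -> int:
    """Process one order webhook synchronously and return an HTTP status code."""
    order = json.loads(raw_body)
    record_order(order)
    send_invoice(order["email"], order["total_price"])
    alert_delivery_team(order["id"], order["line_items"])
    # The 2xx response is only returned after all three jobs have finished,
    # so the connection stays open for the whole duration.
    return 200
```

Because the response waits on all three jobs, every order in flight occupies server resources until it completes. At 50 concurrent requests that is manageable; the trouble starts when that number climbs.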

On a regular day, Flowerify receives an average of 500 orders, and at peak times the Shopify store makes up to 50 concurrent requests to the delivery service API. That is a fair amount of traffic for your single-CPU, 4GB RAM server on AWS EC2, but nothing to worry about.

Scalability problem

With Flowerify's current setup and customer base, order processing and deliveries have run smoothly and customers are satisfied. Then the week of Valentine's Day rolls around, and daily flower orders shoot up to 15,000, with peaks of 1,000 to 3,000 concurrent requests. Everyone across the country is sending their loved ones flowers, and existing customers are recommending Flowerify to friends and colleagues as the best flower delivery service in town.

This growth is obviously exciting for the company, and the numbers look good, but the same cannot be said for the server running the delivery service, which now has to process up to 3,000 concurrent requests from the Shopify store.

Under the increased load, requests start to time out and fail, and at certain points the server exhausts its capacity and has to shut down and restart before it can serve requests again. As a result, many orders are dropped and never reach the delivery department, and lots of customers do not get their flowers delivered.

Imagine the damage and losses if your online store disappoints customers during Valentine's week. Your CEO would definitely not be pleased.

Comparing different solutions

You clearly have a scalability problem, and you need to act fast to serve your customers and save the business. Let's look at different solutions that can be deployed to remedy the situation.

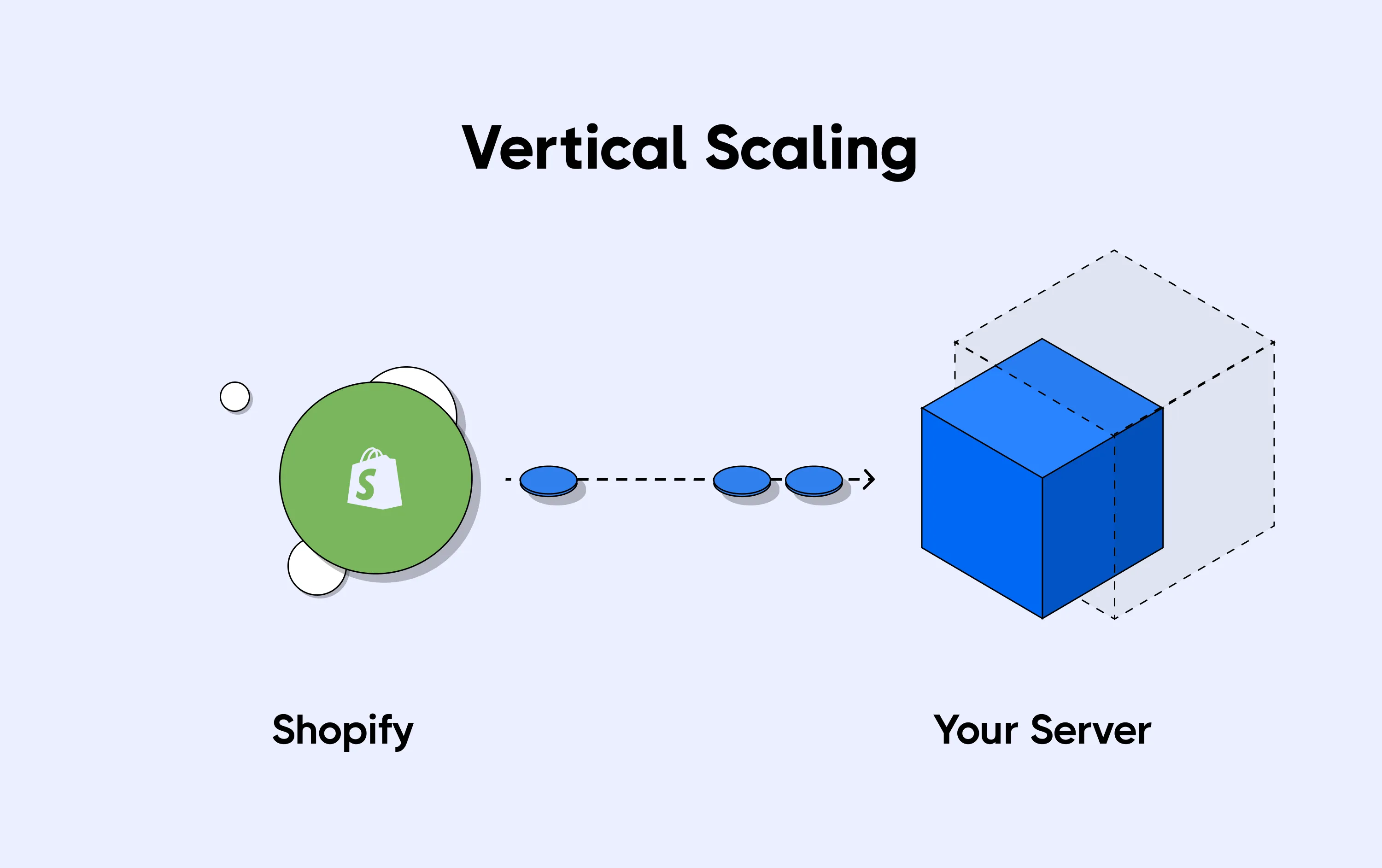

Vertical scaling of the server

The easiest but least effective way to solve the problem is to make the server more powerful. Your current server has 4GB of RAM and one CPU and is hosted on AWS. To make it more powerful, you add more memory and CPU cores. Increasing the capacity of a single server in this way is known as vertical scaling.

Using the AWS EC2 pricing calculator, here are the monthly costs of scaling up the server:

| Memory (RAM) | CPUs | Cost per month (USD) |
|---|---|---|
| 4GB | 1 | 18.60 |
| 16GB | 4 | 64.54 |
| 32GB | 4 | 95.71 |
| 32GB | 8 | 126.08 |
| 64GB | 8 | 188.42 |

The first row of the table is Flowerify's current monthly hosting bill, and the cost rises steeply as server capacity increases down the table: the largest configuration costs roughly ten times as much. The cost of this option is high and might not fit your budget.
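To put the table in numbers, the short Python sketch below expresses each plan as a multiple of the current bill. It uses only the figures from the table above.

```python
# Monthly EC2 costs from the table: (RAM in GB, CPUs, USD per month).
PLANS = [
    (4, 1, 18.60),
    (16, 4, 64.54),
    (32, 4, 95.71),
    (32, 8, 126.08),
    (64, 8, 188.42),
]

def cost_multiples(plans):
    """Return each plan's monthly cost as a multiple of the first (current) plan."""
    baseline = plans[0][2]
    return [(ram, cpus, round(cost / baseline, 2)) for ram, cpus, cost in plans]

for ram, cpus, multiple in cost_multiples(PLANS):
    print(f"{ram}GB / {cpus} CPU: {multiple}x the current bill")
```

The 16GB plan already costs about 3.5 times the current bill, and the 64GB plan about 10 times.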

Even if you have the budget for it, there is still a bigger problem with this approach.

Although increasing the server's capacity would let it do more work, this approach hits a hard limit with the kind of problem at hand: adding capacity will have little impact.

The failing requests are a concurrency problem, not a question of how much compute power the server offers. A more powerful server would only serve each request faster; no matter how powerful it is, a large enough surge of requests will still overwhelm it.
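Little's law makes this concrete: the average number of requests in flight equals the arrival rate multiplied by the time each request takes (L = λW). The Python sketch below uses purely hypothetical numbers (a server that can hold 100 requests at once, taking 2 seconds per request, or 1 second after an upgrade); none of them are Flowerify measurements.

```python
def max_sustainable_rate(max_in_flight: int, seconds_per_request: float) -> float:
    """Little's law (L = λW) solved for λ: the highest steady arrival rate a
    server can absorb if it can hold at most max_in_flight requests at once."""
    return max_in_flight / seconds_per_request

def requests_in_flight(arrivals_per_second: float, seconds_per_request: float) -> float:
    """Little's law (L = λW): average number of requests being served at once."""
    return arrivals_per_second * seconds_per_request

# Hypothetical server that can hold 100 requests at a time.
for seconds in (2.0, 1.0):  # before and after a "faster server" upgrade
    rate = max_sustainable_rate(100, seconds)
    print(f"{seconds}s per request -> sustains {rate:.0f} requests/s")

# A hypothetical spike of 300 requests/s needs 300 in flight even at 1s each.
print(requests_in_flight(300, 1.0))
```

Halving the time per request doubles the rate the server can sustain, but any spike that arrives faster than that ceiling still pushes more requests in flight than the server can hold.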

Convincing the company to spend more money for little impact is probably not an argument that will fly with your team, so this solution is unlikely to be your winner.

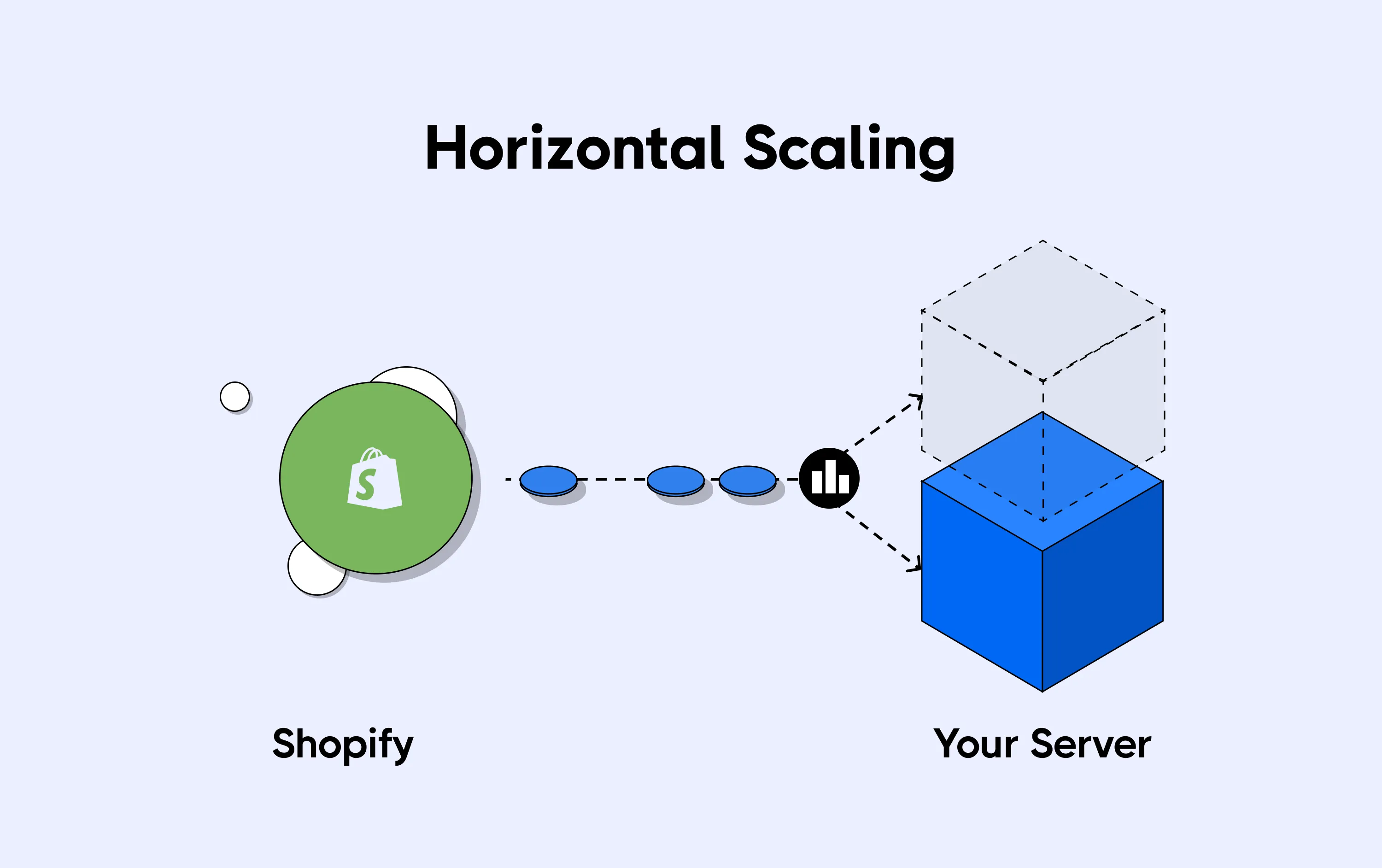

Deploying more servers (horizontal scaling)

Spikes in traffic are a network-heavy problem, not a compute-heavy one. You don't need more power; you need more servers to distribute the load across.

By deploying more instances of the delivery API server, you can spread the load coming from the webhook requests to more servers so that a single server does not get overwhelmed and shut down.

This load distribution can be handled by a load balancer that sits in front of multiple delivery API instances and proxies the incoming webhook requests to them. It is a very effective answer to the spikes Flowerify is experiencing, so let's look at what it takes to set it up.
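The core of a load balancer's job can be sketched in a few lines of Python using round-robin selection, one common distribution strategy. The instance URLs are hypothetical, and a real load balancer also performs health checks, failover, and the actual proxying of HTTP traffic, none of which is shown here.

```python
import itertools
from typing import Sequence

class RoundRobinBalancer:
    """Hands out backend URLs in a fixed rotation, one per incoming request."""

    def __init__(self, backends: Sequence[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._rotation = itertools.cycle(backends)

    def next_backend(self) -> str:
        return next(self._rotation)

# Hypothetical delivery API instances behind the balancer.
balancer = RoundRobinBalancer([
    "http://delivery-api-1.internal",
    "http://delivery-api-2.internal",
    "http://delivery-api-3.internal",
])
for _ in range(4):
    print(balancer.next_backend())  # the fourth call wraps back to instance 1
```

Round robin assumes every instance is equally capable; with identical delivery API servers that is a reasonable default.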

As effective as this solution is, it can take quite some time (up to a week) to set up and troubleshoot properly for reliability. With your current workload of releasing new features almost every week, plus pending bug reports and support tickets, you don't have the capacity for such a large time investment.

Keep in mind, too, that Flowerify cannot afford this time investment given the situation on the ground. Valentine's Day will soon be over, but the damage from the current predicament will be long-lasting.

Asynchronous processing

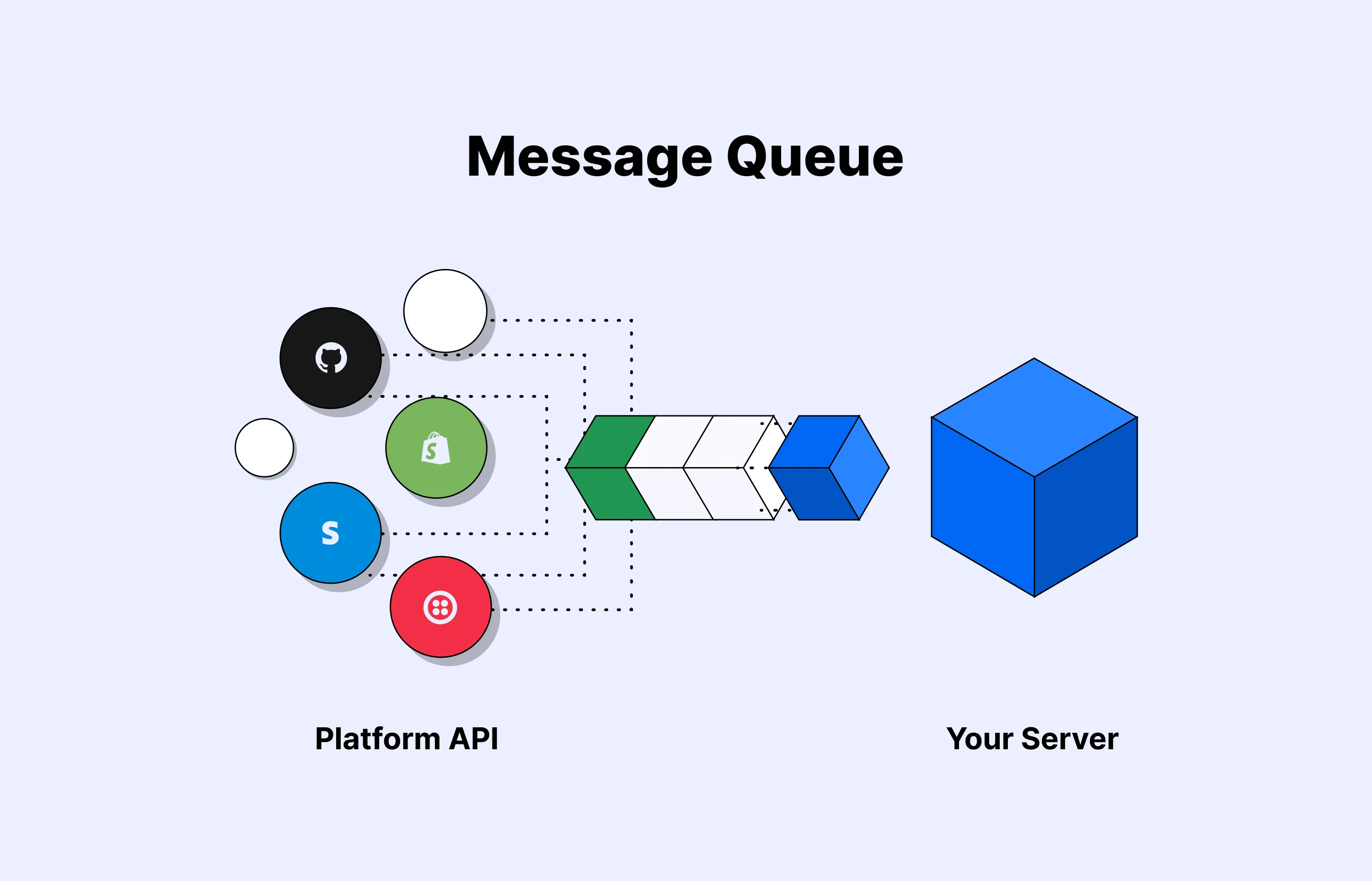

Consider looking at the problem from another perspective. This time, instead of focusing on the server by trying to make it stronger or deploying more instances, focus on the process by which webhook requests from the Shopify store are handled.

A better solution is to find a way to ingest the heavy traffic coming from the Shopify store and forward it to the server at a rate it can handle. The ingesting system queues up the requests and forwards them to the server, making sure that no request is dropped or fails because of server overload. This asynchronous processing strategy for working with webhooks is explained in great detail in this post.

One architectural pattern fits this need: request processing using message queues. A message queue introduces asynchronous request processing, absorbing growing network traffic and forwarding it to the desired destination.

Simply put, you install a message queuing system in between the Shopify store and your delivery API server. When request spikes occur, the message queue ingests the traffic and forwards the requests to your delivery API server at a rate it can handle, until every single request is dealt with.
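Here is a minimal, single-process Python sketch of that ingest-then-drain idea. Python's in-memory `queue.Queue` stands in for a real message queue, `forward` is a hypothetical callback that would send each request to the delivery API, and the rate limit is an assumed value. A production queue would also persist messages and retry failed deliveries.

```python
import queue
import time
from typing import Callable

def ingest(buffer: queue.Queue, requests: list) -> None:
    """Accept every incoming webhook immediately, however large the burst."""
    for request in requests:
        buffer.put(request)

def drain_at_rate(buffer: queue.Queue, forward: Callable[[dict], None],
                  max_per_second: float) -> int:
    """Forward queued requests in arrival order, starting at most
    max_per_second deliveries per second. Returns how many were forwarded."""
    interval = 1.0 / max_per_second
    forwarded = 0
    last_start = None
    while True:
        try:
            request = buffer.get_nowait()
        except queue.Empty:
            return forwarded  # backlog cleared
        if last_start is not None:
            # Pause until a full interval has passed since the previous delivery began.
            wait = last_start + interval - time.monotonic()
            if wait > 0:
                time.sleep(wait)
        last_start = time.monotonic()
        forward(request)
        forwarded += 1

# Usage: a burst of 5 orders is buffered instantly, then drained at 10 per second.
delivered = []
buffer = queue.Queue()
ingest(buffer, [{"order_id": n} for n in range(5)])
drain_at_rate(buffer, delivered.append, max_per_second=10)
```

Ingestion never waits on the delivery API, so a burst is accepted as fast as it arrives, while the drain loop keeps the load on the server flat.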

This is the preferred approach because it fixes the problem right away, and you don't need to spend more on increasing server capacity or deploying more servers. However, you still need to implement the message queue. This is where Hookdeck comes in.

Hookdeck saves the day

Hookdeck is an Infrastructure as a Service (IaaS) for webhooks that lets you benefit from message queues without writing a single line of code. Hookdeck sits between the webhook sender and your processing server, queuing requests and delivering them to your endpoint at a rate your API can handle.

Hookdeck can be set up in a few steps, with no installation or professional help required. You can quickly plug Hookdeck in between your Shopify store and delivery API and get a high-performance message queue, request tracing, request logging, alerting, retries, error handling, and an intuitive dashboard to search and filter requests.
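Retries are one of the features listed above. Hookdeck's own retry behavior is configured in the product rather than in your code, so the Python sketch below is not its implementation; it only illustrates the general technique of retrying a failed delivery with exponential backoff. `send` is a hypothetical callable that makes one delivery attempt and returns an HTTP status code.

```python
import time
from typing import Callable

def deliver_with_retries(send: Callable[[], int], max_attempts: int = 5,
                         base_delay: float = 1.0) -> bool:
    """Call send() until it returns a 2xx status or attempts run out.
    Waits base_delay, 2 * base_delay, 4 * base_delay, ... between attempts."""
    for attempt in range(max_attempts):
        try:
            status = send()
        except OSError:
            status = None  # treat connection errors like a failed attempt
        if status is not None and 200 <= status < 300:
            return True
        if attempt < max_attempts - 1:
            time.sleep(base_delay * 2 ** attempt)
    return False
```

Doubling the delay after each failure gives an overloaded server progressively more room to recover instead of hammering it at a constant rate.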

Follow these simple steps:

- Sign up for a Hookdeck account

- Add Shopify as the webhook provider

- Add the API destination endpoint for the delivery service

- Replace the destination endpoint in Shopify with the one generated by Hookdeck

The best part is that you can start using Hookdeck for free and scale up as needed, serving up to 3 million requests for as little as $15. It saves both time and money.
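As a rough sanity check, the Python arithmetic below sizes a full week at the Valentine's peak of 15,000 orders per day against the 3 million requests for $15 quoted above. It assumes each order produces exactly one webhook; a store subscribed to several order topics would send more.

```python
# Figures from the scenario and the quoted price; assumes one webhook per order.
ORDERS_PER_DAY_PEAK = 15_000
DAYS = 7
PLAN_REQUESTS = 3_000_000
PLAN_PRICE_USD = 15.00

week_requests = ORDERS_PER_DAY_PEAK * DAYS
share_of_plan = week_requests / PLAN_REQUESTS
cost_per_thousand = PLAN_PRICE_USD / PLAN_REQUESTS * 1_000

print(f"Valentine's week webhooks: {week_requests:,}")
print(f"Share of the 3M-request allowance: {share_of_plan:.1%}")
print(f"Cost per 1,000 requests: ${cost_per_thousand:.4f}")
```

At one webhook per order, the entire peak week comes to 105,000 requests, about 3.5% of that allowance.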

Flowerify can save its business in a matter of minutes and continue growing as one of the most promising startups.

Conclusion

Products are built to serve customers, and customers want to be served as fast as possible. A product's ability to adjust its capacity cost-efficiently to meet its users' demands is what sets it apart from others.

Handling more users, clients, transactions, and requests without degrading the overall user experience is something you have to be proactive about to avoid losing business, and with Hookdeck, you can start making the right decisions today.