Lessons From Hookdeck’s Quest for Scalability

Lately, we have been working hard on Hookdeck’s scalability. We recently onboarded a few customers who significantly increased the number of daily events that we ingest, which meant it was time for us to tackle operating Hookdeck at scale.

I’ve spent a good deal of my career studying and improving applications’ performance, so getting back into the engineering side of this has been a lot of fun! We hadn’t previously invested much time or energy in scalability because we were focused on the product itself, its features, and on making sure that we could get adoption.

As a founding engineer at Hookdeck, I’ve come across many obstacles on the frontlines. Fortunately, with the right team, tools, and experience, these issues can be overcome. Here is the story of how we homed in on performance optimization, specifically operating Hookdeck at scale.

The issues…

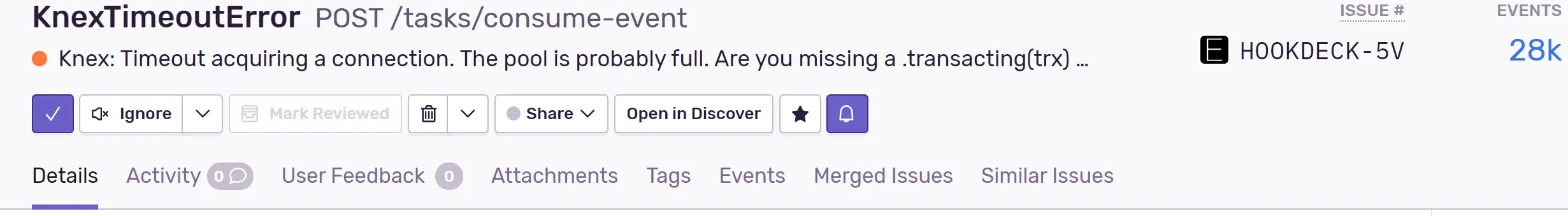

The first issue that needed fixing manifested as a Sentry alert – a database timeout.

Sentry is a great tool that we use internally at Hookdeck to track performance issues. One of its remarkable features is that it allows you to track your front-end and back-end performance alike.

That specific alert signaled errors that we started seeing randomly, but we never lost an event because we built the app following the 12-factor app guiding principles.

In this case, we implemented our workers based on the disposability principle. The workers responsible for ingesting events do not acknowledge an event if an error occurs, so the event returns to our Pub/Sub queue and is picked up again.
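As a rough illustration of that acknowledge-only-on-success pattern, here is a minimal sketch. It is not our actual worker code: the `QueueMessage` interface, the `EventHandler` type, and the `ingest` function are hypothetical stand-ins, and the handler is synchronous to keep the example short (a real worker would be asynchronous).

```typescript
// Stand-in for a queue message. Real Pub/Sub messages also expose ack(),
// but this local interface keeps the sketch free of dependencies.
interface QueueMessage {
  id: string;
  data: string;
  ack(): void;
}

// Hypothetical handler: returns normally on success, throws on failure.
type EventHandler = (data: string) => void;

// Acknowledge only after the handler succeeds. On error we deliberately
// skip the ack, so the queue redelivers the message later.
function ingest(message: QueueMessage, handle: EventHandler): boolean {
  try {
    handle(message.data);
    message.ack();
    return true;
  } catch {
    return false; // left unacknowledged: the event is not lost
  }
}
```

If the worker crashes halfway through, the effect is the same as the error branch: no ack is sent, so the message stays in the queue.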

The second issue manifested itself in the form of out-of-memory errors on some of our worker pods in our Kubernetes cluster. We became aware of this issue because we were seeing alerts in our cloud console. Again, thanks to the way we implemented things, expecting a container to fail at any moment, we were able to avoid the loss of data despite the crashes.

It’s (almost) always the database

Back in my consulting days, when a client hired us to tackle a performance optimization problem, the first thing we looked at was the database. And most of the time, that’s where we stopped looking because it’s where we found the problems.

But when diagnosing performance issues, it’s important to note that there are roughly three types of resources that a computer consumes: CPU, memory, and I/O. If you’re running an isolated algorithm that makes complex calculations, you’re likely using CPU and memory. But when you’re fetching information from another source such as a database or a cloud storage bucket, you’re consuming I/O.

We use PostgreSQL as our database, and we use Aiven to host it (which I strongly recommend; these guys are fantastic). Aiven provides basic but powerful tools that helped us diagnose our performance issues.

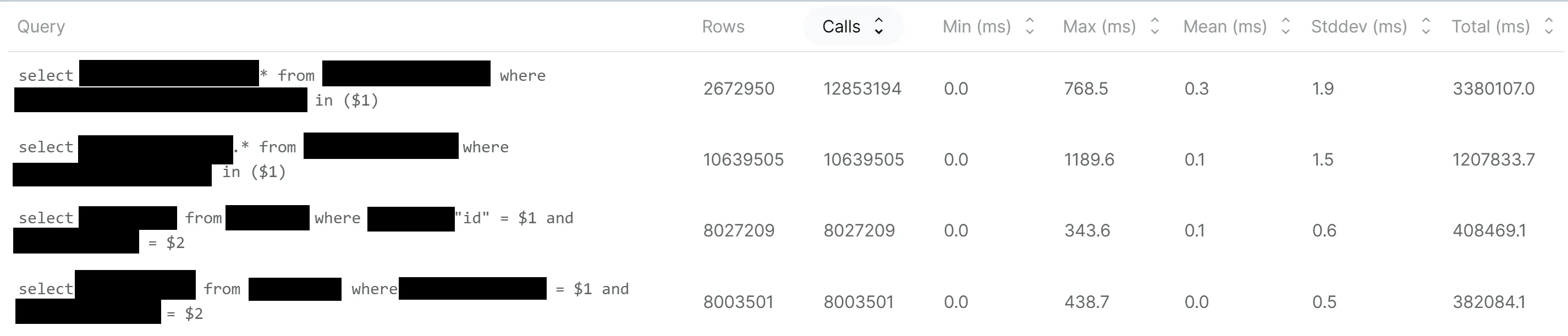

We dug into our query statistics and looked at the total time, a number that represents the amount of time spent on that specific query if it were the only one running on the server. We found a few queries executed very often (basically for every event that we ingest). We proceeded to optimize them using PostgreSQL’s EXPLAIN functionality (I strongly recommend that you learn how to use it, it will save you at some point - guaranteed).

We realized that a few of our more read-intensive queries were missing some key indexes. After fixing our indexes (and rewriting some other queries that were slow), the queries that our code executes most often now have a mean execution time between 0.1ms and 0.3ms! This is compared to an average of between 0.5 seconds and 3 seconds before the optimizations.

This optimization ultimately resolved the timeout errors that we were experiencing. This makes sense because the connections to the database are held for much less time by our executing code, thus freeing them up for subsequent queries.

A small word of caution: if indexes were the solution for everything, we’d add them on every single column. The reason we don’t is that indexes are also expensive. They:

- May take a lot of space depending on the size and cardinality of the data that you store in them; and

- Impact write performance (insert, update, delete) because the database engine has to update the indexes in addition to updating the data in the table.

Should we have tackled database optimizations earlier?

No! I believe that, as an engineer, you have to walk a fine line between under-optimization and over-optimization.

If you’re working in a large software development team and are part of a company with access to resources, you’re probably performing some load/stress test before pushing a feature out to production.

But, at least in the context of a startup where you’re looking to move fast, optimizing early means:

- Less time to build; and

- Focusing on an optimization for code that might not get used as much as you expect.

Another reason to avoid over-optimization is that most database interactions are carried out through some abstraction layer. Also, during development, you’re not sure what feature of your application will get traction. Therefore you won’t know beforehand what to optimize.

A common mistake I see is picking the wrong query to optimize. If one query takes 3 seconds on average and another takes 1 second, which one do you tackle first? The answer lies in how often your code calls them. If your 3-second query executes twice a day, and your 1-second query executes 10,000 times a day, focus on the one that takes 1 second. Tools such as Aiven’s query statistics are essential to diagnosing such issues.
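To make that arithmetic concrete, here is a small sketch that ranks queries by total time per day (calls multiplied by mean duration). The `QueryStat` shape and the function names are made up for illustration and do not reflect the format of Aiven’s query statistics.

```typescript
// One row of query statistics. Field names are illustrative only.
interface QueryStat {
  name: string;
  callsPerDay: number;
  meanSeconds: number;
}

// Total daily cost = how often the query runs x how long each run takes.
function totalSecondsPerDay(q: QueryStat): number {
  return q.callsPerDay * q.meanSeconds;
}

// Sort a copy so the query with the highest total cost comes first.
function rankByTotalTime(stats: QueryStat[]): QueryStat[] {
  return stats.slice().sort((a, b) => totalSecondsPerDay(b) - totalSecondsPerDay(a));
}

const ranked = rankByTotalTime([
  { name: "slow but rare", callsPerDay: 2, meanSeconds: 3 },       // 6 s/day
  { name: "faster but hot", callsPerDay: 10000, meanSeconds: 1 },  // 10,000 s/day
]);
// ranked[0] is the "faster but hot" query, so it gets optimized first.
```

The 1-second query costs 10,000 seconds a day against 6 seconds for the 3-second one, which is why it comes out on top.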

Hunting down our out-of-memory issues

At Hookdeck, we write code in TypeScript and we run Node.js. We did our homework and knew our average message size and how many messages we could process in parallel – or so we thought. So when we started seeing out-of-memory errors on some of our Kubernetes pods, we were a little surprised.

This issue proved to be a little trickier to tackle.

In an ideal scenario, our workers would never crash. Instead, our messages would get queued up in our message queue. After all, that’s what it’s for!

We built a notification system for such events; if the queue gets backed up beyond a certain threshold, we immediately get notified to take action. In this case, we would scale up the workers to ingest more quickly or troubleshoot if we feel that it’s an anomaly.

We use Google Pub/Sub as our message queue. Its Node.js SDK exposes a setting for flow control designed to avoid overwhelming workers. We had that value configured to 500 in our tests.

We tried isolating the problem in our staging environment, a close replica of production. Since we run on Kubernetes, every code change or instrumentation meant we had to do a new deployment and parse through many logs during load testing. It works, but it’s not ideal.

Instead, we ended up building a Docker-based local environment, which taught us a lot about how Node.js and our app manage memory.

Start testing

We started with no resource (CPU and memory) limits. We expected that processing a fixed number of messages (let’s say 1,000) with an average message size of 10 KB would result in 1,000 × 10 KB = 10,000 KB ≈ 10 MB of memory utilization. Under load, Node.js kept claiming available memory but released it once the load test was over.

Then, we introduced resource limits and reran our tests. That setup is closer to our production setup, since we limit memory on our pods to a fixed amount. This time, under load, we started seeing OOM exceptions.

One thing to note about how our workers pull data is that a message consists of an identifier and some other (small) pieces of metadata. We store the message body in another database to avoid our messages getting too large (and therefore too expensive as the Pub/Sub service calculates pricing based on data transferred).

Gradually, we lowered Pub/Sub’s “maxMessages” setting and observed the memory consumption behavior using docker stats. Memory exhaustion slowed down but kept occurring.

We then discovered Pub/Sub’s allowExcessMessages setting, a boolean that controls whether messages are pre-loaded and is “true” by default. Setting it to “false” got memory utilization under control, as the client stopped pre-pulling messages. It was good news because we had found the issue, but it also meant that we could no longer benefit from the message pre-pull optimization. We then gradually raised “maxMessages” while monitoring memory usage, and the workers now behave as expected.
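For reference, the two flow-control settings discussed here look roughly like this. It is a sketch under assumptions: the `FlowControl` interface is a local stand-in for the client’s options so the snippet compiles without the library, the value 100 is illustrative rather than our production setting, and `maxInFlightKB` is a hypothetical helper for a back-of-the-envelope estimate.

```typescript
// Local stand-in for the flow-control options of the Pub/Sub Node.js
// client, declared here so the sketch has no dependencies.
interface FlowControl {
  maxMessages: number;
  allowExcessMessages: boolean;
}

// Illustrative values only.
const flowControl: FlowControl = {
  maxMessages: 100,
  allowExcessMessages: false, // stop the client from pre-pulling extra messages
};

// With the real client, these options are passed when opening the
// subscription, e.g. pubsub.subscription("my-subscription", { flowControl }),
// where "my-subscription" is a placeholder name.

// Rough estimate of event-body data held in memory at once. It ignores
// runtime overhead and only makes sense when excess messages are disabled.
function maxInFlightKB(fc: FlowControl, avgBodyKB: number): number {
  return fc.maxMessages * avgBodyKB;
}
```

With an average body of 10 KB, `maxInFlightKB(flowControl, 10)` gives 1,000 KB, which is the kind of number to compare against the pod’s memory limit before raising “maxMessages”.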

Other findings

While diagnosing our memory issues, we used several tools, including profilers and APM. One that was particularly helpful was the profiler built into Node.js. It’s a sample-based profiler (as opposed to one based on events or code instrumentation). Such profilers work by collecting samples of the call stack periodically. The aggregate gives you a picture of where your program is spending most of its time.

We confirmed a few suspicions of our program spending time in I/O. We realized that calls to some infrastructure API services could take 3-5 seconds in some rare instances, which we confirmed later with some additional logging.

In conclusion

There are a lot of ways to approach performance optimization. It is a complex problem, but we are lucky enough to have tools that help, and tooling is essential to solving these issues. At the very least, you have to monitor your resource utilization. Luckily, if you are on one of the major cloud providers (such as GCP or AWS), monitoring tools are easily accessible.

Always pay close attention to your I/O, as it could be the source of a lot of issues. High memory usage could just be a sign that your I/O operations are too slow and data is staying in memory for too long. Avoid premature optimizations, as you may end up chasing issues that are not real production issues.

Happy building!