Webhook Retry Best Practices for Sending Webhooks

Webhook delivery will fail. Networks drop packets, servers crash mid-request, endpoints become temporarily unreachable during deployments, and rate limits get exceeded. The difference between a frustrating outbound webhook service and a reliable one comes down to how you handle these failures.

This guide covers the retry strategies, backoff algorithms, and operational patterns that make outgoing webhook delivery fault-tolerant without overwhelming your infrastructure or your customers' endpoints.

Why Retries Are Non-Negotiable

A single webhook delivery attempt has many points of failure: DNS resolution, TCP connection establishment, TLS handshake, request transmission, server processing, and response transmission. Any of these can fail due to transient issues that resolve in seconds or minutes.

Without retries, a brief network hiccup or a 30-second deployment window means lost events. For webhooks carrying payment confirmations, inventory updates, or user actions, lost events translate directly to broken business processes and unhappy customers.

You can't eliminate failures, so your solution for sending webhooks needs to make them recoverable.

The Anatomy of a Retry Strategy

A complete retry strategy answers four questions:

- When to retry: Which failures are worth retrying?

- How often to retry: What's the delay between attempts?

- How long to retry: When do you give up?

- What happens after: Where do exhausted events go?

When to Retry: Classifying Failures

Not all failures deserve retries. Classify HTTP responses into three categories:

Retriable failures (temporary issues, worth retrying):

- 5xx errors (500, 502, 503, 504): Server-side problems that often resolve quickly

- Connection timeouts: Endpoint was reachable but didn't respond in time

- Connection refused/reset: Endpoint temporarily unavailable

- DNS resolution failures: Temporary DNS issues

Non-retriable failures (permanent issues, don't retry):

- 4xx errors (except 429): Client-side problems that won't fix themselves

  - 400 Bad Request: Your payload is malformed

  - 401 Unauthorized: Credentials are wrong or revoked

  - 403 Forbidden: Endpoint rejects the request

  - 404 Not Found: Endpoint doesn't exist

  - 410 Gone: Endpoint was permanently removed

Rate-limited (special handling required):

- 429 Too Many Requests: Endpoint is asking you to slow down. Honor the Retry-After header if present. Otherwise, back off aggressively before retrying.

Retrying permanent failures wastes resources and can look like an attack to the receiving endpoint. Move them directly to your dead-letter queue for investigation.
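As a rough illustration of the classification above, the following Python sketch maps a delivery outcome to an action. The Action enum and the classify function are hypothetical names, not part of any library. Treating non-2xx responses outside the 4xx and 5xx ranges as permanent is an assumption of this sketch, not something the categories above prescribe.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    DONE = "done"                        # delivered successfully
    RETRY = "retry"                      # transient failure: schedule another attempt
    RETRY_RATE_LIMITED = "rate_limited"  # 429: retry, but honor Retry-After
    DEAD_LETTER = "dead_letter"          # permanent failure: send to the DLQ


def classify(status: Optional[int]) -> Action:
    """Map a delivery outcome to an action.

    `status` is the HTTP status code, or None when no response arrived
    (timeout, connection refused/reset, DNS failure).
    """
    if status is None:
        return Action.RETRY
    if 200 <= status < 300:
        return Action.DONE
    if status == 429:
        return Action.RETRY_RATE_LIMITED
    if 500 <= status < 600:
        return Action.RETRY
    # 4xx other than 429. Unexpected 1xx/3xx responses also land here,
    # which is an assumption of this sketch.
    return Action.DEAD_LETTER
```

For example, classify(503) yields Action.RETRY, while classify(404) yields Action.DEAD_LETTER.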

Exponential Backoff: The Foundation

Fixed retry intervals (for example, retrying every 30 seconds) are problematic. If the endpoint is down, you're hammering it constantly, potentially slowing its recovery. If it's rate-limiting you, you're making things worse.

Exponential backoff solves this by increasing the delay between each retry attempt:

Attempt 1: Wait 1 second

Attempt 2: Wait 2 seconds

Attempt 3: Wait 4 seconds

Attempt 4: Wait 8 seconds

Attempt 5: Wait 16 seconds

...

A simple formula will suffice: delay = base_delay * 2 ^ (attempt_number - 1), with attempt_number starting at 1.

This gives failing endpoints breathing room to recover while still retrying persistently.

Capping the Maximum Delay

Unbounded exponential growth eventually produces absurd delays. After 10 attempts with a 1-second base, you'd be waiting over 17 minutes. After 20 attempts, over 12 days.

Cap your maximum delay to something reasonable for your use case. A common pattern is capping at 1 hour, then continuing to retry at hourly intervals until exhausting the retry window.
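A minimal sketch of capped exponential backoff, assuming the schedule listed earlier (the first retry waits base_delay, and each later retry doubles it). The function name and the default values are illustrative choices, not a prescribed API.

```python
def backoff_delay(attempt: int, base_delay: float = 1.0, max_delay: float = 3600.0) -> float:
    """Seconds to wait before retry number `attempt` (1-based), capped at max_delay."""
    if attempt < 1:
        raise ValueError("attempt must be >= 1")
    # Clamp the exponent so very large attempt counts cannot overflow a float.
    exponent = min(attempt - 1, 62)
    return min(max_delay, base_delay * (2 ** exponent))


# First five delays with a 1-second base: 1, 2, 4, 8, 16 seconds.
schedule = [backoff_delay(n) for n in range(1, 6)]
```

With these defaults the delay reaches the 1-hour cap at the thirteenth retry (2 ^ 12 = 4096 seconds, which exceeds 3600), and every retry after that waits one hour.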

Jitter: Preventing the Thundering Herd

Exponential backoff has a subtle problem. If many webhooks fail simultaneously (common during an outage), they'll all retry at exactly the same intervals. When the endpoint recovers, it gets hit with a synchronized wave of retry traffic that can trigger another failure.

This is the thundering herd problem, and jitter solves it by adding randomness to retry intervals.

There are several jitter strategies:

Full jitter: delay = random(0, exponential_delay)

- Spreads retries across the entire range

- Most effective at preventing synchronized spikes

Equal jitter: delay = (exponential_delay / 2) + random(0, exponential_delay / 2)

- Guarantees at least half the exponential delay

- More predictable but still effective

Decorrelated jitter: delay = random(base, previous_delay * 3)

- Each delay depends on the previous one

- Good distribution without tight correlation to attempt count

Full jitter is generally recommended for webhook retries: of the three, it is the most effective at breaking up the synchronized retries that pure exponential backoff produces.
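The three jitter formulas above can be sketched in Python as follows. The function names, the rng parameter (included so results can be made reproducible in tests), and the retry_delay helper are assumptions of this example. The max_delay cap on decorrelated jitter is also an addition, since that formula would otherwise grow without bound.

```python
import random

_default_rng = random.Random()


def full_jitter(exponential_delay, rng=_default_rng):
    # Anywhere between 0 and the full exponential delay.
    return rng.uniform(0, exponential_delay)


def equal_jitter(exponential_delay, rng=_default_rng):
    # At least half the exponential delay, plus a random share of the other half.
    half = exponential_delay / 2
    return half + rng.uniform(0, half)


def decorrelated_jitter(base, previous_delay, max_delay, rng=_default_rng):
    # Depends on the previous delay rather than the attempt count.
    return min(max_delay, rng.uniform(base, previous_delay * 3))


def retry_delay(attempt, base=1.0, max_delay=3600.0, rng=_default_rng):
    """Capped exponential backoff with full jitter; `attempt` is 1-based."""
    exponent = min(attempt - 1, 32)  # clamp to keep the arithmetic bounded
    exponential_delay = min(max_delay, base * 2 ** exponent)
    return full_jitter(exponential_delay, rng)
```

For decorrelated jitter, a caller would typically start with previous_delay equal to base and feed each returned value back in on the next attempt.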

Multi-Tier Retry Architecture

Real-world failures have different timescales. A network blip resolves in milliseconds. A deployment takes minutes. An infrastructure outage might last hours. A single retry strategy can't optimally handle all of these.

Tier 1: Immediate Retries (Milliseconds to Seconds)

For transient network issues, retry immediately with minimal delay:

- 2-3 retry attempts

- 100-500ms delays

- Catches the majority of connection hiccups

These happen within your delivery worker before the request is considered failed.

Tier 2: Short-Term Retries (Minutes to Hours)

For temporary outages like deployments or brief service disruptions:

- Exponential backoff with jitter

- Delays: 1 min → 5 min → 15 min → 30 min → 1 hour

- 5-10 retry attempts over 2-4 hours

These are scheduled retries, typically managed by your queue infrastructure.

Tier 3: Long-Term Retries (Hours to Days)

For extended outages or maintenance windows:

- Hourly or multi-hour intervals

- Continue for 24-72 hours (or longer, depending on your SLA)

- Lower priority, background processing
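One way to picture the three tiers is as a single schedule lookup. The sketch below is illustrative only: the constant names, the specific Tier 1 delays, and the 72-hour window are assumptions chosen from the ranges above, and jitter is left out to keep the example short.

```python
from typing import Optional

TIER1_DELAYS = [0.1, 0.25, 0.5]            # seconds, retried inside the delivery worker
TIER2_DELAYS = [60, 300, 900, 1800, 3600]  # 1 min to 1 hour, scheduled via the queue
TIER3_INTERVAL = 3600                      # hourly background retries
RETRY_WINDOW = 72 * 3600                   # give up 72 hours after the first attempt


def next_delay(attempt: int, elapsed: float) -> Optional[float]:
    """Seconds to wait before retry `attempt` (1-based), or None to give up.

    `elapsed` is the number of seconds since the first delivery attempt.
    """
    if elapsed >= RETRY_WINDOW:
        return None  # retries exhausted: the event goes to the dead-letter queue
    if attempt <= len(TIER1_DELAYS):
        return TIER1_DELAYS[attempt - 1]
    tier2_index = attempt - len(TIER1_DELAYS) - 1
    if tier2_index < len(TIER2_DELAYS):
        return TIER2_DELAYS[tier2_index]
    return TIER3_INTERVAL
```

In this layout, retries 1 to 3 fall in Tier 1, retries 4 to 8 in Tier 2, and everything after that in Tier 3 until the window closes.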

Respecting Rate Limits

When you receive a 429 Too Many Requests response, the endpoint is explicitly asking you to slow down. Ignoring this damages your relationship with the customer's infrastructure and may get your traffic blocked entirely.

The Retry-After Header

Many rate-limiting systems include a Retry-After header indicating how long to wait:

HTTP/1.1 429 Too Many Requests

Retry-After: 60

The value can be:

- An integer (seconds to wait):

Retry-After: 120

- An HTTP date:

Retry-After: Wed, 21 Oct 2026 07:28:00 GMT

- An ISO 8601 timestamp (non-standard and less common):

Retry-After: 2026-10-21T07:28:00Z

Always honor this header. If it says wait 5 minutes, wait at least 5 minutes before retrying—regardless of what your backoff algorithm calculates.

When Retry-After Is Missing

If you get a 429 without Retry-After, back off aggressively. Double or triple your normal backoff delay. The endpoint is overloaded, and continued pressure makes things worse.
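The header handling described above could look roughly like this in Python, using only the standard library. The function names and the default multiplier of 3 are assumptions of this sketch. It accepts all three value formats listed earlier and treats timestamps without a timezone as UTC.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def parse_retry_after(value: Optional[str], now: Optional[datetime] = None) -> Optional[float]:
    """Return seconds to wait, or None if the header is missing or unparseable."""
    if value is None:
        return None
    value = value.strip()
    now = now or datetime.now(timezone.utc)

    if value.isdecimal():  # delay in seconds, e.g. "120"
        return float(value)
    try:  # HTTP date, e.g. "Wed, 21 Oct 2026 07:28:00 GMT"
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):
        try:  # ISO 8601, e.g. "2026-10-21T07:28:00Z"
            when = datetime.fromisoformat(value.replace("Z", "+00:00"))
        except ValueError:
            return None
    if when.tzinfo is None:  # assumption: naive timestamps are UTC
        when = when.replace(tzinfo=timezone.utc)
    return max(0.0, (when - now).total_seconds())


def rate_limit_delay(retry_after: Optional[str], backoff: float, multiplier: float = 3.0) -> float:
    """Delay after a 429: honor Retry-After if usable, otherwise back off harder."""
    wait = parse_retry_after(retry_after)
    if wait is None:
        return backoff * multiplier  # no usable header: multiply the normal delay
    return max(wait, backoff)        # the header is a floor, never a shortcut
```

Taking the maximum of the header value and the computed backoff means the sender never retries sooner than the endpoint asked, but may still wait longer if its own schedule says so.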

Circuit Breakers: Protecting Against Cascading Failures

Retries help individual webhooks recover from transient failures. But what happens when an endpoint is down for an extended period? Your retry queue grows, consuming resources. Workers get stuck waiting on timeouts. Other healthy endpoints get starved of delivery capacity.

Circuit breakers solve this by temporarily stopping all traffic to a failing endpoint.

Circuit Breaker States

Closed (normal operation): Requests flow through normally. The circuit breaker monitors failure rates.

Open (endpoint failing): Requests are immediately rejected without attempting delivery. Events route directly to a retry queue. This gives the endpoint time to recover and frees your workers for other endpoints.

Half-open (testing recovery): After a cooldown period, allow a single test request through. If it succeeds, close the circuit (return to normal). If it fails, reopen the circuit and reset the cooldown timer.

Trigger Conditions

Configure thresholds that make sense for your system:

- Open the circuit if 5 of the last 10 requests failed

- Or if the failure rate exceeds 50% over a 1-minute window

- Or if p95 latency exceeds 10 seconds

- Stay open for 30-60 seconds before half-opening

Per-Endpoint Circuit Breakers

Critical: implement circuit breakers per customer endpoint, not globally. One customer's failing endpoint shouldn't affect delivery to other customers.

This is especially important in multi-tenant systems where a single broken endpoint could otherwise degrade service for everyone.
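To make the state machine concrete, here is a minimal single-threaded sketch of a per-endpoint circuit breaker. The class, its parameters, and the breakers registry are hypothetical. It implements only the "5 of the last 10 requests failed" trigger, with a 30-second cooldown, and leaves out the failure-rate and latency triggers.

```python
import time
from collections import defaultdict, deque


class CircuitBreaker:
    """Opens after `threshold` failures among the last `window` requests."""

    def __init__(self, threshold=5, window=10, cooldown=30.0, clock=time.monotonic):
        self.threshold = threshold
        self.cooldown = cooldown
        self.clock = clock
        self.results = deque(maxlen=window)  # True marks a failed request
        self.opened_at = None                # None means the circuit is closed
        self.probing = False                 # True while a half-open test is in flight

    def allow_request(self) -> bool:
        if self.opened_at is None:
            return True            # closed: deliver normally
        if self.probing:
            return False           # half-open: only one test request at a time
        if self.clock() - self.opened_at >= self.cooldown:
            self.probing = True    # cooldown elapsed: let one test request through
            return True
        return False               # open: route the event to the retry queue

    def record(self, success: bool) -> None:
        if self.probing:
            self.probing = False
            if success:
                self.opened_at = None          # test succeeded: close the circuit
                self.results.clear()
            else:
                self.opened_at = self.clock()  # test failed: reopen, reset cooldown
            return
        self.results.append(not success)
        if self.opened_at is None and sum(self.results) >= self.threshold:
            self.opened_at = self.clock()


# One breaker per customer endpoint, keyed by a hypothetical endpoint ID.
breakers = defaultdict(CircuitBreaker)
```

A delivery worker would call breakers[endpoint_id].allow_request() before sending and record(...) afterwards. A production version would also need locking or a shared store if several workers deliver to the same endpoint.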

Dead-Letter Queues: The Safety Net

After exhausting all retry attempts, events need somewhere to go. A dead-letter queue (DLQ) captures these failed events for investigation and potential recovery.

What Belongs in the DLQ

- Events that exhausted all retry attempts

- Events that received non-retriable errors (4xx except 429)

- Events that failed validation or had malformed payloads

- Events manually marked as failed after investigation

DLQ Best Practices

Preserve full context: Store the original payload, all delivery attempts with timestamps and responses, the endpoint configuration, and any relevant metadata. You'll need this for debugging.

Categorize failures: Tag DLQ entries by failure type (timeout vs. 4xx vs. 5xx). This helps identify patterns. For example, if you see a hundred 401 errors from the same customer, their API key probably changed.

Implement alerting: Monitor DLQ size and growth rate. A sudden spike indicates a systemic issue. Set thresholds that trigger alerts before the queue grows unmanageable.

Enable reprocessing: Once the root cause is fixed, you need a way to replay failed events. Support both individual replays (for testing) and bulk replays (for recovering from outages).

Set retention policies: DLQ entries shouldn't live forever. Decide how long to keep them (7-30 days is common) and what happens after—archive to cold storage or delete.
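As an illustration of these practices, a DLQ record might be modeled like the sketch below. The class and field names are hypothetical, and the 30-day default is simply the upper end of the range mentioned above. The category property tags an entry by its last attempt, and expired applies the retention policy.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class Attempt:
    at: datetime
    status: Optional[int]        # None when no HTTP response was received
    error: Optional[str] = None  # e.g. "timeout" or "connection refused"


@dataclass
class DeadLetter:
    event_id: str
    endpoint_url: str
    payload: bytes               # original payload, kept verbatim for replay
    attempts: List[Attempt] = field(default_factory=list)
    dead_lettered_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    @property
    def category(self) -> str:
        """Tag by the last attempt: its error label if there was no response,
        otherwise "4xx" or "5xx"; "other" when nothing else applies."""
        if not self.attempts:
            return "other"
        last = self.attempts[-1]
        if last.status is None:
            return last.error or "other"
        if 400 <= last.status < 500:
            return "4xx"
        if 500 <= last.status < 600:
            return "5xx"
        return "other"

    def expired(self, now: datetime, retention_days: int = 30) -> bool:
        """True once the entry is older than the retention period."""
        return (now - self.dead_lettered_at).days >= retention_days
```

Grouping entries by category and endpoint_url is then enough to surface patterns such as a burst of 4xx failures from a single customer.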

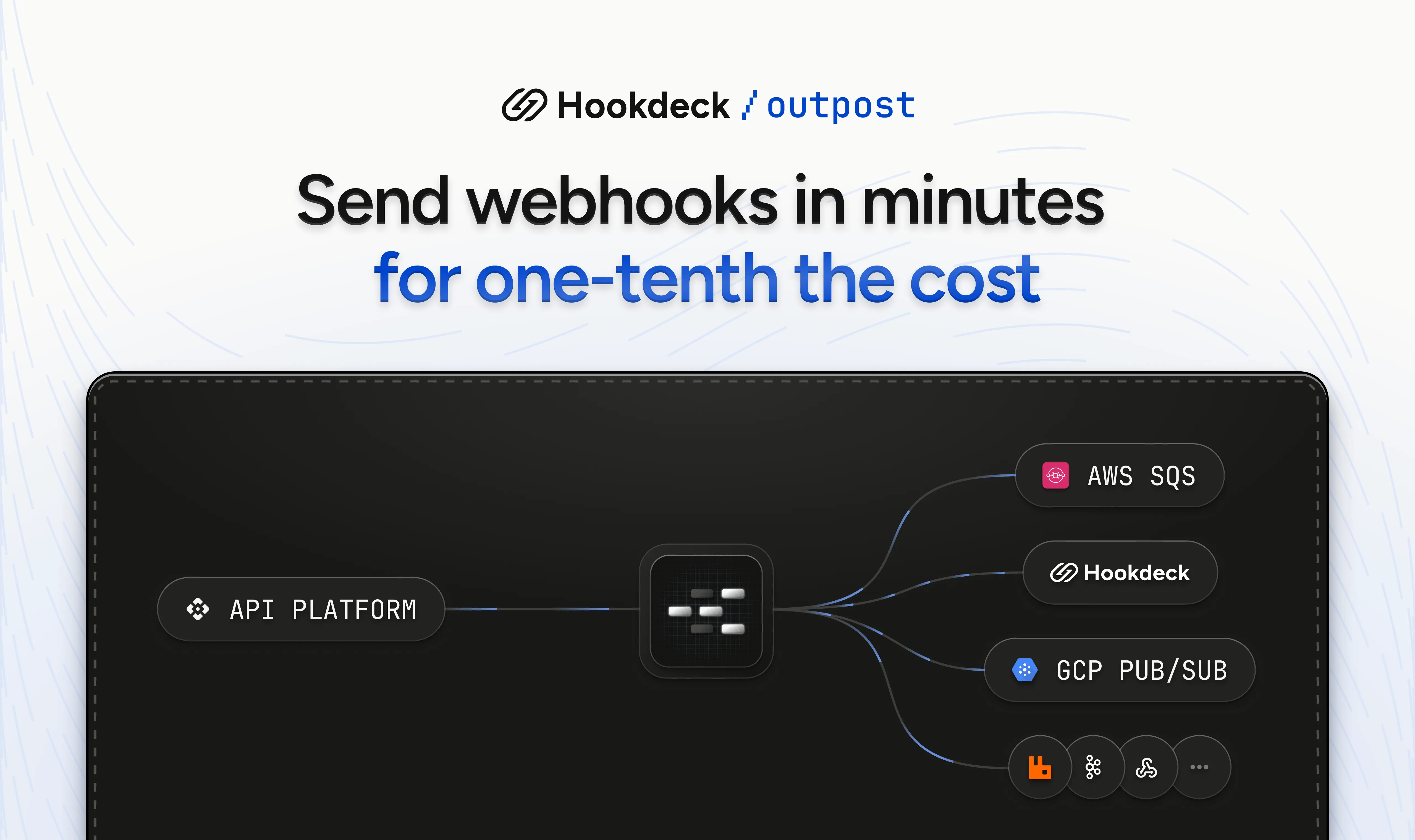

How Hookdeck Outpost Handles Retries

Hookdeck's Outpost is a serverless service that's purpose-built for sending webhooks. It keeps track of each event, its status, and the delivery attempts, implementing an at-least-once delivery guarantee. Here's how our managed solution handles retries:

Automatic Retries

By default, Outpost automatically retries events if the destination is unavailable, has an incorrect configuration, or returns a non-2xx HTTP response code. The retry strategy can be configured using the MAX_RETRY_LIMIT and RETRY_INTERVAL_SECONDS environment variables.

The retry interval uses an exponential backoff algorithm with a base of 2.

Manual Retries

Manual retries can be triggered for any given event via the Event API or user portal.

Disabled Destinations

If the destination for an event is disabled (through the API, the user portal, or automatically because a failure threshold has been reached), the event will be discarded and cannot be retried.

Getting Started

You can try Hookdeck Outpost's retry capabilities on the free tier. For teams not ready for a managed service, Outpost is also available as an open-source, self-hosted solution.