How to Solve GitLab's Automatic Webhook Disabling

If your GitLab webhooks have mysteriously stopped firing, you've likely encountered GitLab's automatic webhook disabling feature. After just four consecutive failures, GitLab temporarily disables your webhook, and after 40 consecutive failures, it disables it permanently. For a broader overview of the platform's webhook capabilities, see our guide to GitLab webhooks features and best practices. If timeouts are causing your failures, see how to solve GitLab webhook timeout errors. This article explains how GitLab's disabling mechanism works, what you can do to work around it, and why routing webhooks through Hookdeck provides a more reliable long-term solution.

Understanding GitLab's webhook disabling mechanism

GitLab includes a protection mechanism that automatically disables webhooks experiencing repeated failures. The system is designed to prevent your GitLab instance from continuously attempting deliveries to broken endpoints, which could slow down event processing and consume server resources.

Here's how it works:

Temporary disabling after 4 failures: When a webhook fails four consecutive times, GitLab temporarily disables it. The webhook enters a backoff period starting at 1 minute, doubling with each subsequent failure up to a maximum of 24 hours. During this backoff period, GitLab won't attempt to deliver any events to your endpoint.

Permanent disabling after 40 failures: If a webhook accumulates 40 consecutive failures, GitLab permanently disables it. Unlike temporarily disabled webhooks, these won't automatically re-enable, even after your endpoint recovers.

What counts as a failure: GitLab considers a webhook delivery failed when:

- Your endpoint returns a 4xx client error (400, 401, 403, 404, etc.)

- Your endpoint returns a 5xx server error (500, 502, 503, etc.)

- The connection times out (GitLab's default timeout is 10 seconds)

- Any other HTTP error occurs during delivery

Self-healing behaviour: As of GitLab 17.11, all webhooks (regardless of whether they return 4xx or 5xx errors) follow the same self-healing path. After the backoff period expires, GitLab automatically re-enables the webhook and attempts delivery again. If the attempt succeeds, the failure counter resets. This is a change from earlier versions where 4xx errors caused immediate permanent disabling.
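The rules above can be expressed as a small model. This is a sketch of the behaviour as this article describes it, not GitLab's implementation: the function names are hypothetical, and treating a missing response (`status` of `None`) as a failure is an assumption standing in for "any other HTTP error".

```python
from typing import Iterable, Optional, Tuple

TIMEOUT_SECONDS = 10        # default timeout quoted in this article
TEMPORARY_THRESHOLD = 4     # consecutive failures before temporary disabling
PERMANENT_THRESHOLD = 40    # consecutive failures before permanent disabling


def is_failure(status: Optional[int], elapsed_seconds: float) -> bool:
    """Return True if a delivery counts as failed under the rules listed above.

    status is None when no HTTP response arrived (assumption: connection error).
    """
    if status is None or elapsed_seconds > TIMEOUT_SECONDS:
        return True
    return 400 <= status <= 599


def count_consecutive_failures(deliveries: Iterable[Tuple[Optional[int], float]]) -> int:
    """Replay (status, elapsed_seconds) pairs; any success resets the counter."""
    failures = 0
    for status, elapsed in deliveries:
        failures = failures + 1 if is_failure(status, elapsed) else 0
    return failures


def hook_state(consecutive_failures: int) -> str:
    """Map a consecutive-failure count to the state described in this article."""
    if consecutive_failures >= PERMANENT_THRESHOLD:
        return "permanently_disabled"
    if consecutive_failures >= TEMPORARY_THRESHOLD:
        return "temporarily_disabled"
    return "enabled"
```

For example, four `503` responses in a row give `hook_state(4) == "temporarily_disabled"`, while a single successful delivery after them brings the count back to zero.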

Why four failures happen more often than you'd think

The four-failure threshold sounds reasonable until you consider real-world scenarios where it's easily triggered.

Brief endpoint downtime is the most common culprit. If your webhook receiver undergoes a deployment, experiences a brief outage, or restarts due to a crash, four failed deliveries can accumulate in seconds during active development. A single developer pushing multiple commits while your endpoint is restarting could disable your webhook.

Network issues and timeouts contribute frequently. GitLab's 10-second timeout is unforgiving. If your endpoint is slow to respond due to high load, database queries, or external API calls, timeouts count as failures. Network congestion between GitLab and your endpoint can push response times over the limit.

Deployment windows are particularly dangerous. If you deploy your webhook receiver using a rolling deployment strategy, there's a window where requests might fail. Four webhook events during that window, and your webhook is disabled.

Configuration errors during development accelerate the path to disabling. A typo in your endpoint URL, an expired API key, or incorrect authentication returns 4xx errors. While these now follow the same self-healing path as 5xx errors, they still count toward your failure threshold and can quickly accumulate during debugging.

Rate limiting on your endpoint can cascade into webhook disabling. If your endpoint returns 429 (Too Many Requests) during high-activity periods, GitLab counts these as failures, potentially disabling webhooks during your busiest times.

The consequence is that critical integrations, including CI/CD triggers, deployment automation, and notification systems, can go silent without warning.

How GitLab's backoff and self-healing works

Understanding GitLab's backoff behaviour helps you plan recovery strategies.

When a webhook is temporarily disabled, GitLab implements an exponential backoff:

- First disable: 1 minute backoff

- Subsequent failures: Backoff doubles each time (2 min, 4 min, 8 min, etc.)

- Maximum backoff: 24 hours

After the backoff period expires, GitLab automatically re-enables the webhook and attempts delivery again. If this attempt succeeds, the webhook returns to normal operation and the failure counter resets. If it fails, the webhook enters another backoff period with an extended duration.
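To make the schedule concrete, here is a minimal sketch that computes the backoff for each consecutive disable period from the three rules listed above (1 minute to start, doubling, capped at 24 hours). The function name and the 1-based `disable_count` parameter are illustrative choices, not GitLab identifiers.

```python
INITIAL_BACKOFF_MINUTES = 1
MAX_BACKOFF_MINUTES = 24 * 60  # 24-hour cap, expressed in minutes


def backoff_minutes(disable_count: int) -> int:
    """Backoff for the nth consecutive disable period (1-based)."""
    if disable_count < 1:
        raise ValueError("disable_count must be >= 1")
    doubled = INITIAL_BACKOFF_MINUTES * 2 ** (disable_count - 1)
    return min(doubled, MAX_BACKOFF_MINUTES)


schedule = [backoff_minutes(n) for n in range(1, 13)]
print(schedule)
# [1, 2, 4, 8, 16, 32, 64, 128, 256, 512, 1024, 1440]
```

Under this model the 24-hour cap is reached on the twelfth consecutive disable period, because the uncapped value (2048 minutes) would exceed 1440 minutes.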

This self-healing mechanism means webhooks can recover automatically once your endpoint is healthy again. However, the escalating backoff means you might miss events for extended periods. A webhook that's been failing intermittently could be disabled for 24 hours at a time, and all events during that window are permanently lost.

Temporary workarounds

Re-enabling webhooks manually

To re-enable a disabled webhook:

- Navigate to your project or group's Settings > Webhooks

- Find the disabled webhook (marked "Disabled" or "Temporarily disabled")

- Click the Test dropdown and select an event type

- If the test request returns a 2xx response, the webhook is re-enabled

You can also re-enable webhooks via the API:

# Trigger a test request to re-enable
curl --request POST \
  --header "PRIVATE-TOKEN: <your_access_token>" \
  "https://gitlab.example.com/api/v4/projects/:id/hooks/:hook_id/test/push_events"

Limitation: This only works if your endpoint is currently healthy. You can't re-enable a webhook while the underlying issue persists.
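If you would rather script the re-enable step than run curl by hand, the same test request can be built with Python's standard library. This is a sketch only: the function name and the example project ID, hook ID, and token are placeholders, and the request is built but not sent, so you can inspect it first.

```python
import urllib.request


def build_test_request(base_url: str, project_id: int, hook_id: int,
                       token: str, trigger: str = "push_events") -> urllib.request.Request:
    """Build (but do not send) the POST request that triggers a test delivery."""
    url = (f"{base_url.rstrip('/')}/api/v4/projects/{project_id}"
           f"/hooks/{hook_id}/test/{trigger}")
    return urllib.request.Request(url, method="POST",
                                  headers={"PRIVATE-TOKEN": token})


# Placeholder values; substitute your own instance, IDs, and access token.
request = build_test_request("https://gitlab.example.com", 42, 7, "<your_access_token>")
print(request.get_method(), request.full_url)
```

To send it, pass the request to `urllib.request.urlopen(request, timeout=15)` and handle `urllib.error.HTTPError` for non-2xx responses. The limitation above still applies: the webhook only comes back if the test delivery succeeds.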

Monitoring webhook status

Proactively monitor your webhooks to catch disabling before it impacts your workflows:

# List all webhooks and their status
curl --header "PRIVATE-TOKEN: <your_access_token>" \
  "https://gitlab.example.com/api/v4/projects/:id/hooks"

Look for the disabled_until field in the response, which indicates when a temporarily disabled webhook will be re-enabled.
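A monitoring script could filter that response for hooks that are currently in a backoff period. The sketch below assumes the response has already been fetched and parsed into a list of dictionaries, that each hook object carries `id` and `url` alongside `disabled_until`, and that timestamps are ISO 8601 strings. The function names are hypothetical.

```python
from datetime import datetime, timezone
from typing import List, Optional, Tuple


def parse_timestamp(value: str) -> datetime:
    """Parse an ISO 8601 timestamp, accepting a trailing 'Z' for UTC."""
    parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    if parsed.tzinfo is None:  # assumption: treat naive timestamps as UTC
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed


def temporarily_disabled(hooks: List[dict],
                         now: Optional[datetime] = None) -> List[Tuple[int, str, str]]:
    """Return (id, url, disabled_until) for hooks whose backoff has not yet expired."""
    now = now or datetime.now(timezone.utc)
    result = []
    for hook in hooks:
        until = hook.get("disabled_until")
        if until and parse_timestamp(until) > now:
            result.append((hook["id"], hook["url"], until))
    return result
```

Run on a schedule, a check like this can raise an alert as soon as the returned list is non-empty. It only detects hooks with a future `disabled_until`, so permanently disabled hooks would need a separate check.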

Limitation: This requires building monitoring infrastructure and doesn't prevent the disabling from happening.

Implementing faster response times

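Since GitLab's 10-second timeout is a common cause of failures, optimize your endpoint to respond quickly: acknowledge each request as soon as it is received, and move slow work such as database queries or external API calls out of the request path.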
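One common way to keep responses fast is to acknowledge the request immediately and hand the payload to a background worker. The following is a minimal sketch using only the Python standard library. The names `pending`, `process`, and `WebhookHandler` are illustrative, and the in-memory queue is an assumption made for brevity.

```python
import json
import queue
import threading
from http.server import BaseHTTPRequestHandler

# In-memory buffer between the HTTP handler and the slow processing code.
pending: "queue.Queue[bytes]" = queue.Queue()
processed = []  # stands in for real side effects in this sketch


def process(raw_body: bytes) -> None:
    """Hypothetical slow work: parse the payload and record its event type."""
    event = json.loads(raw_body)
    processed.append(event.get("object_kind"))


def worker() -> None:
    """Drain the queue forever; errors are contained so the thread keeps running."""
    while True:
        raw_body = pending.get()
        try:
            process(raw_body)
        except Exception as exc:  # a bad payload must not kill the worker
            print(f"failed to process event: {exc}")
        finally:
            pending.task_done()


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        pending.put(self.rfile.read(length))  # enqueue only, no slow work here
        self.send_response(202)               # acknowledge before processing
        self.end_headers()


def start_worker() -> threading.Thread:
    """Start the background worker as a daemon thread."""
    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread
```

To try it locally, call `start_worker()` and then serve the handler with `http.server.HTTPServer(("", 8000), WebhookHandler).serve_forever()`; the port is arbitrary. Because the queue lives in memory, queued events are lost if the process restarts, so a production receiver would typically use a durable queue instead.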

Limitation: This addresses timeout-related failures but doesn't help with endpoint downtime, deployment windows, or network issues.

High availability infrastructure

Deploy your webhook receiver across multiple availability zones with load balancing:

- Use health checks to route traffic away from unhealthy instances

- Implement graceful shutdown during deployments

- Consider serverless functions (AWS Lambda, Google Cloud Functions) for automatic scaling

Limitation: Adds significant infrastructure complexity and cost. Still vulnerable to regional outages and configuration errors, and on GitLab versions before 17.11, to immediate permanent disabling on 4xx errors.

For self-managed GitLab: Adjusting the feature flag

On self-managed GitLab instances, administrators can disable the auto-disabling feature entirely using the Rails console:

# In Rails console (flag name may vary by GitLab version)
Feature.disable(:auto_disabling_web_hooks)

Limitation: This removes all protection against runaway webhook failures, potentially impacting GitLab performance. Only available for self-managed instances, not GitLab.com.

Why these workarounds fall short

While these workarounds help, they share fundamental limitations:

No protection during outages: If your endpoint is down, events are lost. GitLab doesn't queue failed webhooks for later delivery. See What Causes Webhooks Downtime and How to Handle the Issues for general strategies.

Manual intervention required: Re-enabling permanently disabled webhooks requires human action or custom automation. There's no built-in way to automatically recover.

Events lost during disable periods: While your webhook is disabled (potentially for up to 24 hours), all events for that webhook are silently dropped. There's no way to recover them.

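4xx errors still count: As of GitLab 17.11, 4xx responses no longer cause immediate permanent disabling, but a single configuration error such as an expired token still counts toward the failure threshold, and every event sent during the resulting backoff is dropped. On earlier versions, the same error permanently disables the webhook and requires manual intervention.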

A better solution: Hookdeck

Hookdeck is webhook infrastructure that sits between GitLab and your endpoints, providing the reliability features that GitLab lacks natively. Instead of pointing your GitLab webhooks directly at your application, you point them at Hookdeck, which manages delivery to your actual endpoint.

How Hookdeck solves the disabling problem

GitLab always sees success. Hookdeck responds to GitLab within 200 milliseconds, well under the 10-second timeout. Your failure counter never increments because GitLab's delivery to Hookdeck always succeeds. Even if your actual endpoint is down for hours, GitLab continues sending webhooks normally.

Automatic retries with exponential backoff. When your endpoint fails to respond with a 2xx status, Hookdeck automatically retries. You can configure up to 50 retry attempts spread across up to a week. Temporary downtime no longer means lost events.

Events are never lost. Hookdeck queues all incoming webhooks. If your endpoint is unavailable, events wait in the queue until delivery succeeds. No more silent event loss during outages or deployment windows.

Extended retry window. While GitLab might disable your webhook after a few minutes of failures, Hookdeck continues retrying for days. Your weekend deployment that accidentally breaks the endpoint? Hookdeck holds those events until Monday when you fix it.

Bulk retry for recovery. If multiple deliveries fail during extended downtime, you can retry them all at once after your endpoint recovers. No need to manually reconcile missed events.

Setting up Hookdeck with GitLab

The integration is straightforward:

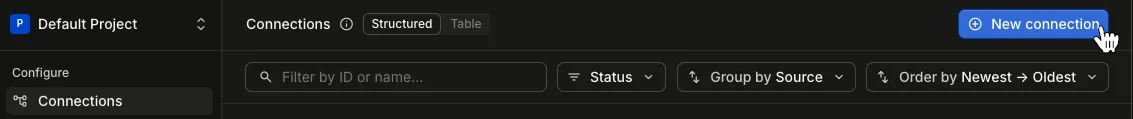

- Create a Hookdeck account and set up a new Source for GitLab

- Copy the Hookdeck URL provided for your Source

- Configure your GitLab webhook to point to the Hookdeck URL instead of your endpoint directly

- Set up a Connection in Hookdeck to route events to your actual endpoint (Destination)

- Configure retry rules and alerting to match your requirements

Once configured, GitLab delivers to Hookdeck (which always succeeds), and Hookdeck manages reliable delivery to your application.
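If you manage many projects, the step that repoints an existing GitLab webhook can be scripted against GitLab's project hooks API rather than done through the UI. The sketch below builds (but does not send) a `PUT` request that changes a hook's `url`. The function name, IDs, and token are placeholders, and the Hookdeck URL shown is a stand-in for the one copied from your Source.

```python
import urllib.parse
import urllib.request


def build_update_hook_request(base_url: str, project_id: int, hook_id: int,
                              token: str, new_url: str) -> urllib.request.Request:
    """Build a PUT request that points an existing project hook at new_url."""
    endpoint = f"{base_url.rstrip('/')}/api/v4/projects/{project_id}/hooks/{hook_id}"
    body = urllib.parse.urlencode({"url": new_url}).encode("ascii")
    return urllib.request.Request(endpoint, data=body, method="PUT",
                                  headers={"PRIVATE-TOKEN": token})


# Placeholder values; the URL below is NOT a real Hookdeck Source URL.
request = build_update_hook_request(
    "https://gitlab.example.com", 42, 7, "<your_access_token>",
    "https://example.invalid/your-hookdeck-source-url",
)
print(request.get_method(), request.full_url)
```

Send it with `urllib.request.urlopen(request, timeout=15)` once the values are filled in. Depending on your GitLab version, other hook settings may need to be passed in the same call to keep them unchanged, so check the API documentation for your instance.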

Additional benefits

Beyond solving the disabling problem, Hookdeck provides:

Comprehensive observability. Hookdeck logs every webhook with full request and response data. See exactly which events arrived, which are pending retry, and which failed. GitLab's native logging retains only 2 to 7 days of history; Hookdeck retains up to 30 days.

Signature verification. Hookdeck validates GitLab's secret token at the edge, ensuring only legitimate events reach your endpoint.
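For context, GitLab sends the configured secret token in the `X-Gitlab-Token` request header, so the check involved is a comparison against the value you expect. The sketch below shows that comparison as you might write it in your own receiver. It does not represent Hookdeck's implementation, and the function name is hypothetical.

```python
import hmac
from typing import Mapping


def token_matches(headers: Mapping[str, str], expected_token: str) -> bool:
    """Compare the X-Gitlab-Token header to the expected secret in constant time."""
    # Header names are case-insensitive, so normalise before the lookup.
    normalised = {name.lower(): value for name, value in headers.items()}
    received = normalised.get("x-gitlab-token", "")
    return hmac.compare_digest(received.encode("utf-8"),
                               expected_token.encode("utf-8"))
```

Using `hmac.compare_digest` rather than `==` avoids leaking information about the secret through timing differences.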

Rate limiting and traffic shaping. Protect your endpoint from webhook floods during high-activity periods. Hookdeck delivers events at a rate your infrastructure can handle.

Deduplication. Filter duplicate events before they reach your endpoint, reducing processing overhead.

Transformations. Modify webhook payloads before delivery, normalizing data or filtering fields you don't need.

Local development. With the Hookdeck CLI, route webhooks to localhost for development and testing without exposing your local machine to the internet.

Conclusion

GitLab's automatic webhook disabling protects the platform from runaway failures. But the four-failure threshold is easily triggered, the escalating backoff can disable webhooks for up to 24 hours, and events during disabled periods are permanently lost.

Workarounds like faster response times, high-availability infrastructure, and proactive monitoring help but don't address the fundamental problem: GitLab doesn't retry failed deliveries or queue events during outages.

For reliable GitLab webhook integrations, route your webhooks through Hookdeck. GitLab always sees successful deliveries, your failure counter never increments, and Hookdeck's automatic retries and event queuing ensure no events are lost. What could be a fragile system becomes a robust foundation for your development automation.