How to Take Control of Your Webhook Reliability

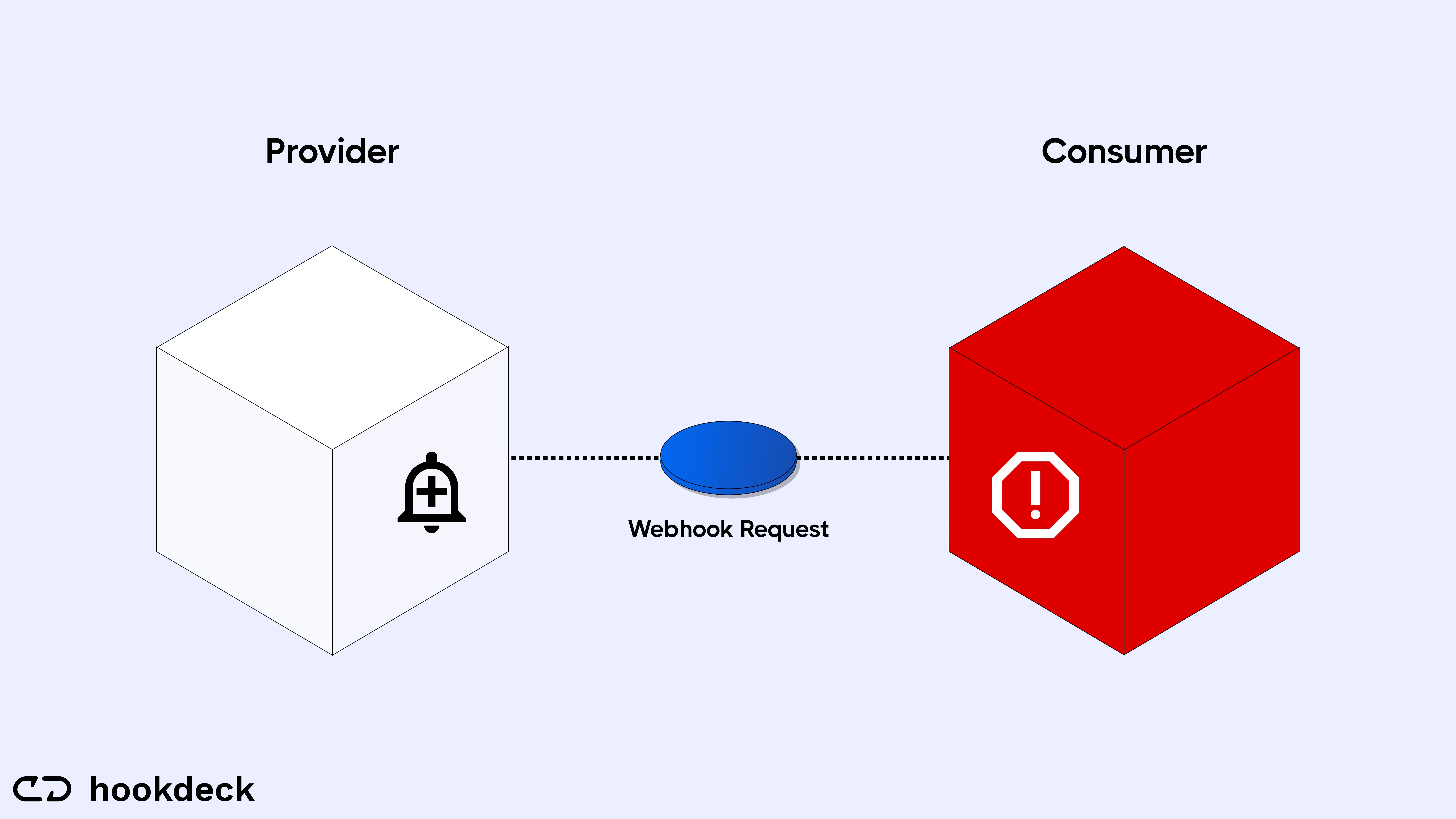

As more custom applications integrate with SaaS platforms such as Stripe and Shopify, webhooks have become part of most software infrastructures. At their core, webhooks are simple HTTPS requests, so they are prone to the same reliability issues as any networked applications exchanging data. There is also the challenge of scaling infrastructure to handle the expected volume of webhooks.

In this article, the first in a series of webhook reliability guides, we will discuss the root causes of failures when handling webhooks. We will also explore solutions and help you pick the right one to make your webhooks reliable.

Webhooks are popular, but very brittle

As mentioned earlier, SaaS integrations are on the rise because services like Stripe, which offers payment services, and Shopify, which provides e-commerce services, now handle common functions required by most modern applications.

Most of these integrations are powered by webhooks; it's almost impossible to build or integrate with a SaaS application today without them.

However, the basic design of webhooks lacks reliability features to match this growing dependence. Webhooks are brittle: they are prone to failures that can have several causes, such as:

- Network failure

- Bad requests

- Authentication failure

- Buggy deployments

- Request timeouts

- Webhook request duplication

- …and more
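Of these failure modes, duplication is one you can guard against directly in your consumer: providers may send the same webhook more than once, for example when a retry follows a delivery that actually succeeded. Below is a minimal Python sketch of an idempotent handler, assuming each payload carries a unique event ID in a field called `id` (a hypothetical name; check your provider's payload format). The in-memory set is for illustration only; a real deployment would use a durable store shared by all consumers.

```python
import json


class IdempotentHandler:
    """Processes each webhook event at most once, keyed by its event ID.

    Assumption: every payload has a unique "id" field (hypothetical name).
    The seen-ID store is an in-memory set for illustration; production code
    would need a durable store shared by all consumers.
    """

    def __init__(self) -> None:
        self.seen_ids: set[str] = set()
        self.processed: list[dict] = []

    def handle(self, raw_body: bytes) -> str:
        event = json.loads(raw_body)
        event_id = event["id"]
        if event_id in self.seen_ids:
            return "duplicate"  # already handled: acknowledge, do nothing
        self.process(event)
        self.seen_ids.add(event_id)  # record only after processing succeeds
        return "processed"

    def process(self, event: dict) -> None:
        # Placeholder for business logic (e.g. marking an order as paid).
        self.processed.append(event)
```

Calling `handle` twice with the same payload processes it once and reports the second call as a duplicate. This check-then-act pattern is not safe across concurrent consumers; a unique constraint in a database is a common way to make it atomic.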

Because webhooks are critical to the operational success of our integrations, we need to start paying more attention to making them reliable.

To learn more about the cost of a failed webhook and why teams need to start paying more attention to delivering webhooks reliably, check out our article “Why You Should Be Paying More Attention to Your Webhooks”.

How to design for webhook reliability

To begin designing for reliability, first we need to have the right mindset about webhooks.

Webhooks originate from providers like Shopify and Stripe, and these providers offer some resilience mechanisms of their own, such as automatically retrying failed deliveries. Even so, we recommend that you let providers do what they do best: let them send your webhooks, while you take full responsibility for making sure they are reliably consumed.

Webhook providers optimize for their reliability in sending you webhooks, not for your reliability in receiving them. This strategy makes sense for providers: their sole responsibility is to get your webhooks to you as fast as possible. Providers also cannot know which webhooks matter most to you, so they focus on delivering them on time and leave prioritization to you.

Knowing this, you have to take responsibility for making your webhook delivery reliable. Webhook reliability consists of two attributes: resiliency and observability.

Resiliency covers two things: the ability of each webhook to be (eventually) delivered despite failures, and the ability of your webhook infrastructure to scale.

Observability, on the other hand, is the feedback mechanism that gives you adequate visibility into the journey of your webhooks from source to destination.

Owning these two reliability pillars helps you deliver all your webhooks, scale under increasing load, trace individual webhooks and observe patterns across them, and set up actionable alerts when things go wrong.

To learn more about the role of webhook providers, why you cannot rely on them for webhook resilience, and the features of a reliable webhook pipeline, check out our article “Why Relying on Webhook Providers Is Not the Answer”.

Webhook reliability is about resilience and observability

In the previous section, we introduced resiliency and observability as the core attributes of a reliable webhook delivery solution. But how do we implement these? How do we go about adding resilience and observability features to our webhook delivery pipeline?

The first step is to understand that while webhooks are HTTP requests on the surface, HTTP is only a wrapper for the event information the webhook communicates.

Thus, while it feels natural to handle webhooks like typical HTTP requests, from an architectural point of view, webhooks are simply events, and the recommended strategy is to use asynchronous processing.

Event-driven architecture is designed to handle events and is built around the concept of asynchronous processing.

So, all the buzzwords aside, what practically needs to be done to implement asynchronous processing? You need to add a queue.
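Here is a minimal sketch of the idea using only Python's standard library: the receiving handler does nothing but enqueue the raw payload and return a success status, while a separate worker drains the queue at its own pace. `receive_webhook` is a hypothetical stand-in for your web framework's route handler, and the in-process `queue.Queue` stands in for a real broker; unlike a broker, it loses messages if the process crashes.

```python
import queue
import threading

# In-process stand-in for a durable message broker (illustration only).
webhook_queue: "queue.Queue[bytes]" = queue.Queue()
results: list[bytes] = []


def receive_webhook(raw_body: bytes) -> int:
    """Hypothetical route handler: enqueue the payload and acknowledge at once.

    Returning immediately keeps the provider's request well within its
    timeout, however slow the downstream processing is.
    """
    webhook_queue.put(raw_body)
    return 202  # Accepted: stored for later processing


def worker() -> None:
    """Consume webhooks from the queue independently of the HTTP request."""
    while True:
        body = webhook_queue.get()
        try:
            results.append(body)  # placeholder for slow business logic
        finally:
            webhook_queue.task_done()


threading.Thread(target=worker, daemon=True).start()
```

Because the handler returns `202 Accepted` as soon as the payload is stored, slow business logic no longer causes provider-side timeouts.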

Message queues

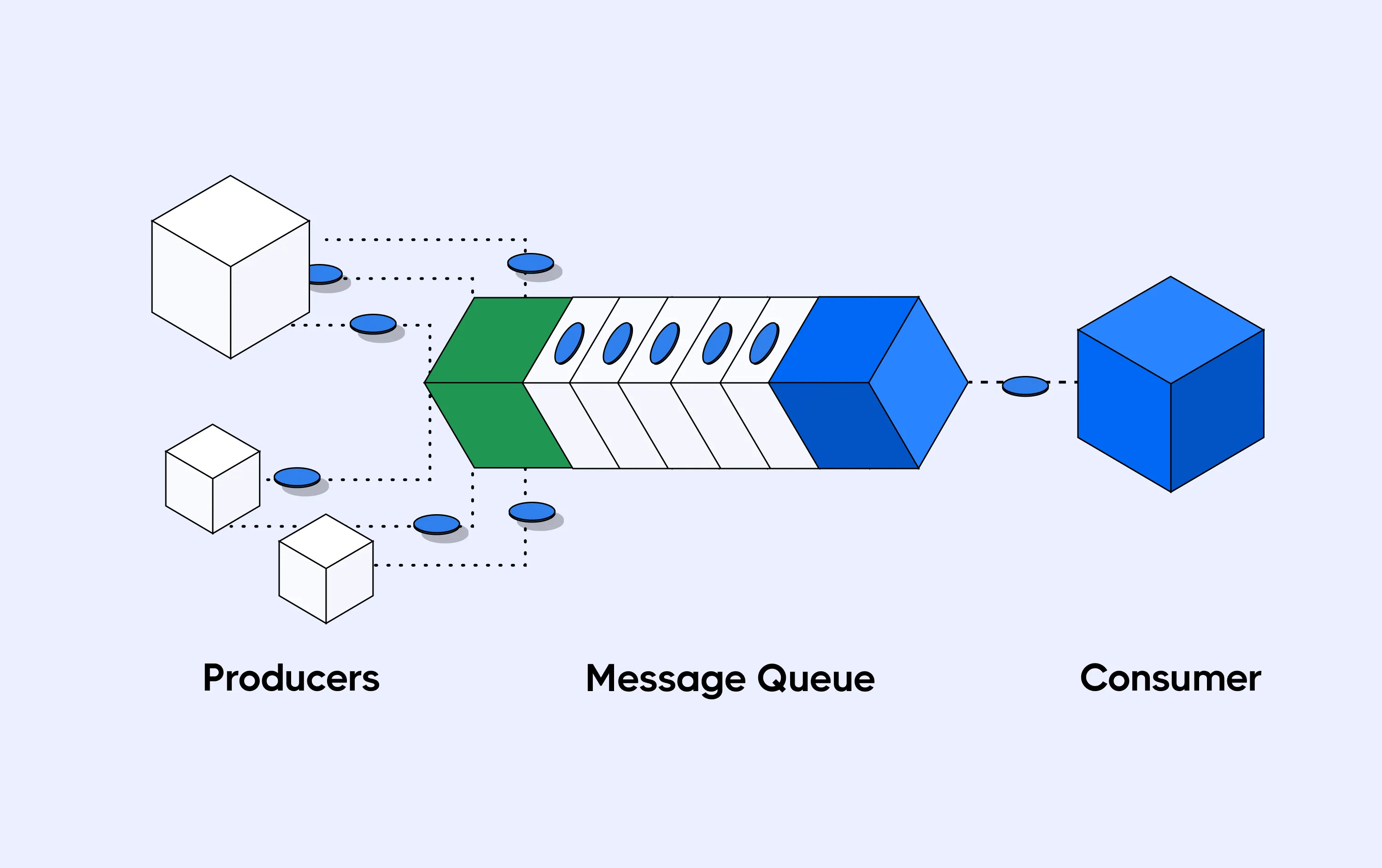

A queue is a message broker that can be added between your webhook provider and consumer to deliver webhooks asynchronously. Once added, a queue buffers messages from the webhook provider and serves them to a consumer or pool of consumers at a controlled rate.
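To make the idea of buffering and a controlled rate concrete, the sketch below absorbs a burst of webhooks in a queue and lets a small pool of consumers drain it, with a shared limiter capping deliveries per second. The `drain` and `RateLimiter` names, the pool size, and the rate are hypothetical; a real broker would hold the buffer durably.

```python
import queue
import threading
import time


class RateLimiter:
    """Allows at most `rate` acquisitions per second, shared across threads."""

    def __init__(self, rate: float) -> None:
        self.interval = 1.0 / rate
        self.next_slot = time.monotonic()
        self.lock = threading.Lock()

    def acquire(self) -> None:
        with self.lock:
            now = time.monotonic()
            wait = self.next_slot - now
            # Reserve the next slot; reset if the limiter has been idle.
            self.next_slot = max(now, self.next_slot) + self.interval
        if wait > 0:
            time.sleep(wait)


def drain(messages: list[str], consumers: int, rate: float) -> list[str]:
    """Buffer `messages` in a queue and deliver them via a consumer pool."""
    buffer: "queue.Queue[str | None]" = queue.Queue()
    for m in messages:
        buffer.put(m)  # the burst is absorbed by the buffer
    for _ in range(consumers):
        buffer.put(None)  # one stop signal per consumer

    limiter = RateLimiter(rate)
    delivered: list[str] = []
    delivered_lock = threading.Lock()

    def consume() -> None:
        while (msg := buffer.get()) is not None:
            limiter.acquire()  # throttle delivery to the downstream service
            with delivered_lock:
                delivered.append(msg)

    threads = [threading.Thread(target=consume) for _ in range(consumers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return delivered
```

For example, `drain(events, consumers=3, rate=50)` delivers every event, but never faster than 50 per second, regardless of how quickly the events arrived.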

A queue is the easiest way to implement asynchronous processing in your webhook delivery. Its addition results in the following:

- Asynchronous processing of webhooks (avoid timeouts)

- Ability to recover from errors with retries

- Request throttling and rate limiting

- Batch recovery

- Webhook workload distribution for scalability

- …and more
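Retries and batch recovery can be sketched as follows: a failed delivery is retried with exponential backoff, and a message that exhausts its attempts is moved to a dead-letter list, where it can be inspected and retried in bulk later. The `deliver` callable, attempt count, and delays are hypothetical parameters; managed queues usually expose similar behavior as configuration (Amazon SQS, for example, supports dead-letter queues).

```python
import time
from typing import Callable


def deliver_with_retries(
    message: dict,
    deliver: Callable[[dict], None],
    dead_letters: list[dict],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Try to deliver `message`, retrying with exponential backoff on failure.

    Returns True on success. After `max_attempts` failures, the message is
    appended to `dead_letters` so it can be inspected and retried in bulk.
    """
    for attempt in range(max_attempts):
        try:
            deliver(message)
            return True
        except Exception:
            if attempt == max_attempts - 1:
                break  # out of attempts: fall through to dead-lettering
            sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, 4s, ...
    dead_letters.append(message)
    return False
```

Injecting `sleep` makes the backoff easy to test without waiting. Production systems often add random jitter to the delays so that many failing consumers do not retry in lockstep.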

To learn more about the asynchronous processing approach and the ROI of using a queue within your webhook infrastructure, check out our article “Why You Should Stop Processing Your Webhooks Synchronously”.

However, note that a queue only takes care of the resiliency aspect of reliability. You will still need to instrument your webhook infrastructure for good observability.
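As a starting point for that instrumentation, the sketch below emits one structured JSON log line per stage of a webhook's journey, keyed by event ID, so that searching your logs for one ID reconstructs its trace. It also includes a simple sliding-window check that signals when failures cross a threshold. The stage names, fields, and thresholds are hypothetical; in practice these signals would feed a log aggregator and alerting system, and you would configure a `logging` handler so the records are actually emitted.

```python
import json
import logging
import time
from collections import deque

logger = logging.getLogger("webhooks")


def log_stage(event_id: str, stage: str, **fields: object) -> dict:
    """Emit one structured log record for a stage of a webhook's journey.

    Extra fields must be JSON-serializable.
    """
    record = {"event_id": event_id, "stage": stage, "ts": time.time(), **fields}
    logger.info(json.dumps(record))
    return record


class FailureRateAlert:
    """Signals an alert when `threshold` failures occur within `window` seconds."""

    def __init__(self, threshold: int, window: float) -> None:
        self.threshold = threshold
        self.window = window
        self.failures: deque[float] = deque()

    def record_failure(self, now: float) -> bool:
        self.failures.append(now)
        # Drop failures that fell out of the sliding window.
        while self.failures and now - self.failures[0] > self.window:
            self.failures.popleft()
        return len(self.failures) >= self.threshold
```

For example, calling `log_stage("evt_1", "received")` on ingestion and `log_stage("evt_1", "delivered", status=200)` after the consumer succeeds yields two correlated records for the same event.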

Comparing solutions and stacks

Now that we understand that queues help take care of the resiliency aspect of reliable webhook delivery, let’s examine the cost of implementation.

Choosing a solution

First, we have to choose a queuing solution, and there are two main approaches: an open-source queue or a managed queue service.

Examples of open-source queuing technologies include Apache Kafka and RabbitMQ, while Amazon SQS and Google Cloud Pub/Sub are popular managed queue services.

Open-source options have the advantage of being very flexible; however, they have a steep learning curve and require considerable expertise to deploy and operate well.

On the other hand, managed queue services take the burden of implementation and deployment off your hands but can be challenging to customize and are restricted to a fixed set of features.

Reliability requirements

After picking a queuing solution, the next step is to implement the reliability requirements of webhooks around the queue. Some queues already provide some of these features, but any missing ones must be built or added. Requirements include:

- Alerting and logging

- Ingestion runtime

- Consumer runtime

- Monitoring

- Custom scripts (for custom workflows like dead-lettering retries, webhook authentication, protocol conversion, etc.)

- Developer tooling
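Webhook authentication, one of the custom workflows listed above, shows what such scripts involve in practice. Many providers sign each payload with a shared secret; the sketch below verifies a hex-encoded HMAC-SHA256 signature of the raw request body. The exact scheme varies by provider (Stripe, for instance, signs a timestamp together with the payload, and Shopify sends a base64-encoded digest), so the signature format here is an assumption; follow your provider's documentation.

```python
import hashlib
import hmac


def sign(secret: bytes, raw_body: bytes) -> str:
    """Compute the hex HMAC-SHA256 signature of the raw request body."""
    return hmac.new(secret, raw_body, hashlib.sha256).hexdigest()


def verify_signature(secret: bytes, raw_body: bytes, received: str) -> bool:
    """Return True if `received` matches the expected signature.

    Verify against the raw bytes exactly as received: re-serializing
    parsed JSON can change the bytes and break the comparison.
    """
    expected = sign(secret, raw_body)
    # compare_digest runs in constant time, avoiding timing side channels;
    # comparing bytes also avoids errors on non-ASCII header values.
    return hmac.compare_digest(expected.encode(), received.encode())
```

Requests that fail verification should be rejected before they reach the queue, so forged payloads never consume processing capacity.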

Making sure all these requirements are present can take weeks to months to implement, test, and stabilize. There is also the added cost (financial and human resources) of maintenance.

For a detailed breakdown of how to choose a queuing solution and what a resilient webhook infrastructure with observability features requires, check out our article “How to Choose a Solution for Queuing Your Webhooks”.

Introducing Hookdeck for webhook reliability

As seen in the previous section, setting up a reliable webhook delivery pipeline with all the required resiliency and observability features is quite challenging.

Developers and teams want to integrate with SaaS applications, not spend precious engineering hours developing and maintaining webhook infrastructure.

Imagine the efficiency boost teams would get from a plug-and-play, reliable webhook solution that deploys quickly.

Well, look no further because what we just described above is Hookdeck.

Hookdeck is the queue for the modern stack as it delivers all the reliability features we have been discussing so far (and even more). The best part is that you can test, deploy, and troubleshoot your integrations in a matter of minutes, resulting in the quickest time to value for implementing reliability into your webhooks.

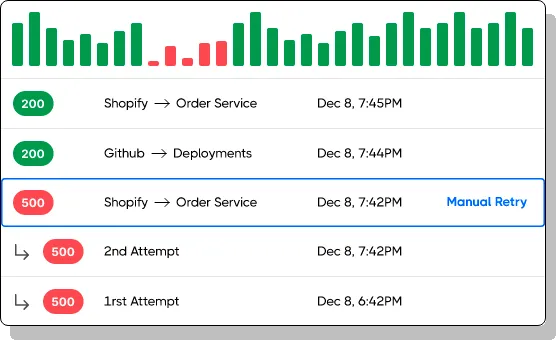

What Hookdeck offers for resilience

- A reliable and scalable queuing solution

- Webhook delivery rate limiting

- Automatic and manual retries for failed webhooks

- Bulk retries for failed webhooks

- Hookdeck CLI for testing and troubleshooting webhooks

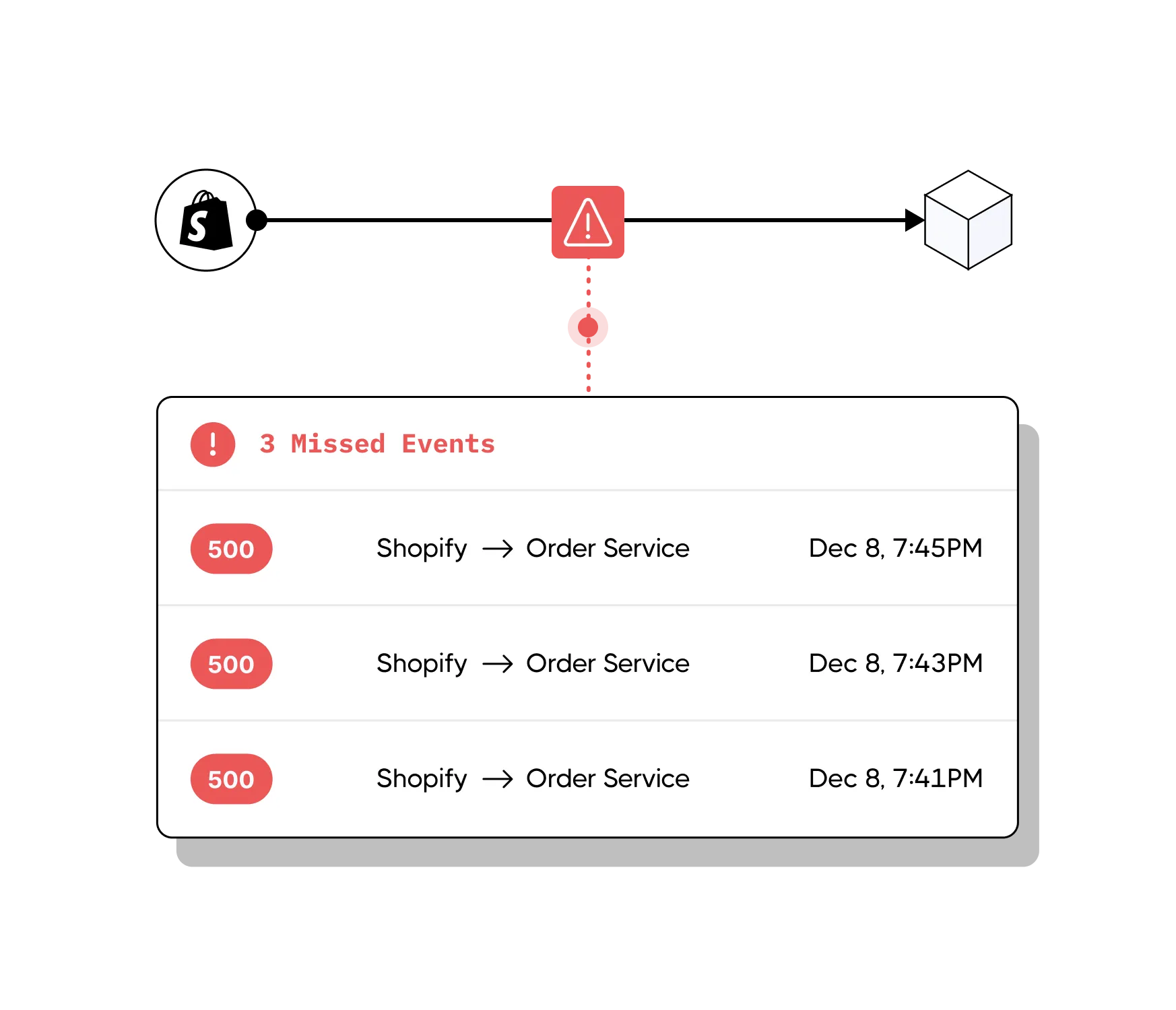

What Hookdeck offers for observability

- Alerting and logging

- Webhook trace monitoring from source to destination

- Historical webhook patterns with querying tools

- Visibility into the webhook payload, headers, and timestamps

- Issue tracking

With Hookdeck, missing a webhook is a thing of the past. To learn more about how Hookdeck delivers on this promise and about the features that improve your developer experience with webhooks, read our article “Hookdeck - The Modern Queueing Solution for Your Webhooks”.

Conclusion

In this article, we have seen that webhook failures are a symptom of missing resilience and observability. To be in control, you can’t rely on providers; instead, you must equip yourself with a (modern) queue that lets you test your integrations and run them reliably in production in a fraction of the time and with far less complexity.

That queuing solution is Hookdeck, and you can start with a free trial today.

Happy coding!
