Why You Should Stop Processing Your Webhooks Synchronously

In the previous article, we concluded that we need to build resiliency and observability features around our webhooks. We also discussed why event-driven architecture is the best way to build resilience into the webhook infrastructure pipeline.

This article will further explain why the event-driven architecture style is the answer to solving our webhook resiliency problems, how we can achieve this architecture with queues, and the pain points queues help us remove.

Why asynchronous processing is recommended when dealing with events

A webhook wraps an event in an HTTP request to communicate it to another remote application or component, which makes it feel natural to treat the webhook in the typical request-response (client/server) communication style.

However, from an architectural perspective, the recommended approach is the asynchronous style of the event-driven architecture. Asynchronous processing implements an "acknowledge first, process later" style of handling webhooks that allows you to scale your webhooks under heavy workloads and handle errors more gracefully.

If you're new to the asynchronous processing style or need a primer, you can check out our article “Introduction to Asynchronous Processing”.

To support this claim, let's compare how webhooks fare under the synchronous approach of the typical request-based model and under the asynchronous approach of the event-driven architecture.

Request timeouts

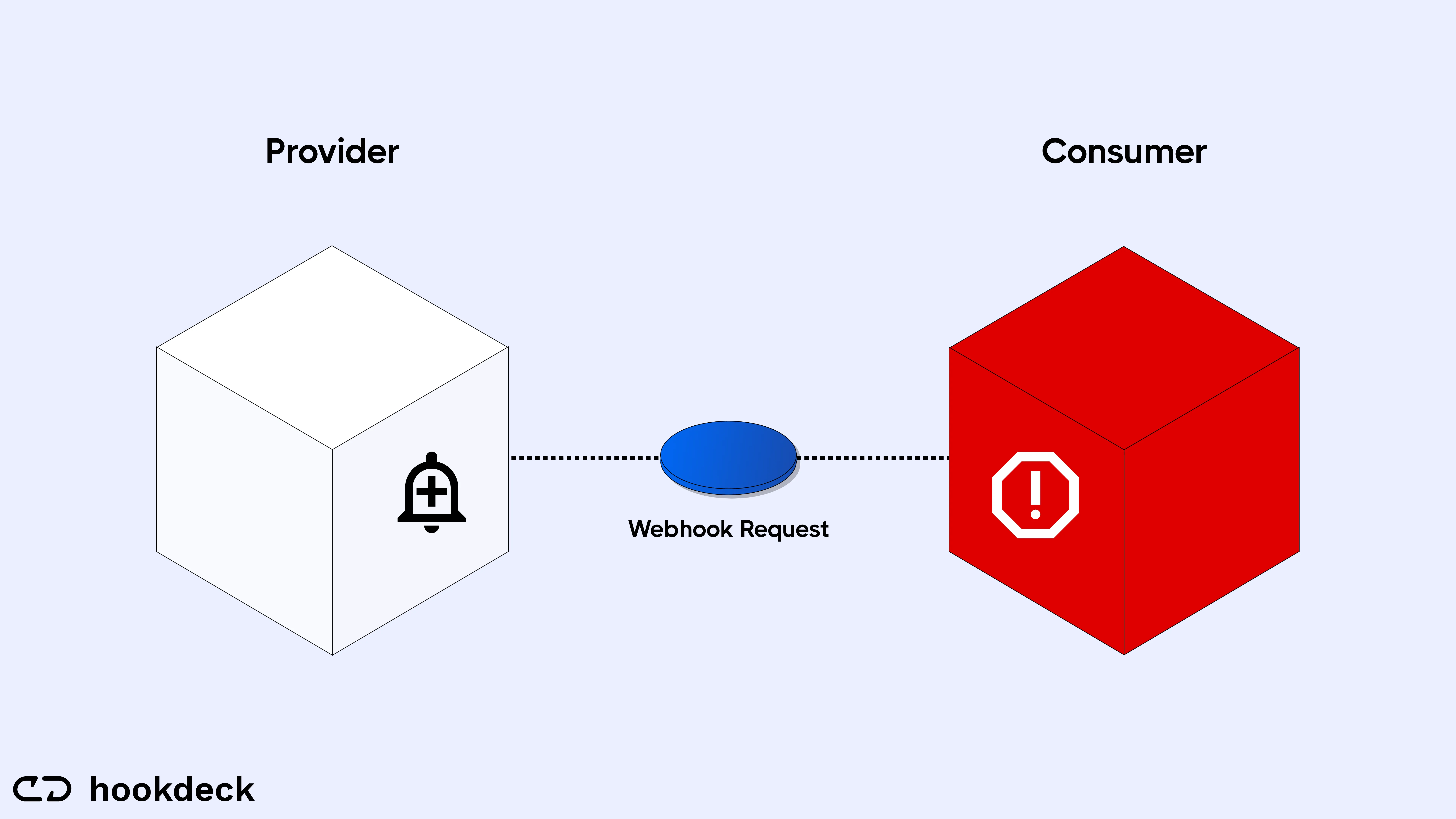

HTTP requests are expected to be processed before a response is sent. If the response time limit is exceeded, the webhook request will time out. When this happens, the webhook provider will assume that the webhook has failed and eventually drop it.

Synchronous approach

In the synchronous client/server approach, the webhook provider has to wait for the webhook to finish processing before it gets a response.

Response time = Request latency + Processing time

Because processing time is part of the response time, the response slows down as the workload grows, which raises the chance that a webhook times out and is dropped.

Asynchronous approach

However, in an event-driven architectural style, events are immediately acknowledged and are then queued to be processed when the consumer is ready. Thus, response time becomes:

Response time = Request latency

This allows consumers to respond to producers as fast as possible, drastically reducing the possibility of timeouts.
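The "acknowledge first, process later" flow can be sketched with Python's standard library. This is a minimal illustration, not a production setup: the names `handle_webhook`, `process`, and `worker` are hypothetical, the 202 status code is an assumed choice of acknowledgment, and the in-memory `queue.Queue` stands in for a durable queue.

```python
import queue
import threading
import time

events = queue.Queue()  # in-memory buffer; an assumption standing in for a durable queue
processed = []


def handle_webhook(payload: dict) -> int:
    """Acknowledge first: enqueue the event and return without processing it."""
    events.put(payload)
    return 202  # assumed acknowledgment status


def process(payload: dict) -> None:
    """Stand-in for slow business logic."""
    time.sleep(0.05)
    processed.append(payload["id"])


def worker() -> None:
    """Process later: pull events off the queue in the background."""
    while True:
        payload = events.get()
        try:
            process(payload)
        finally:
            events.task_done()


threading.Thread(target=worker, daemon=True).start()

start = time.perf_counter()
statuses = [handle_webhook({"id": i}) for i in range(5)]
ack_seconds = time.perf_counter() - start  # excludes processing time

events.join()  # wait for the background work to finish
```

Here the time to acknowledge all five webhooks does not include the 0.05 seconds each one takes to process, which is the point of the second formula above.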

Back pressure

Back pressure occurs when so many requests arrive at the same time that they exceed the server's capacity.

Synchronous approach

Under the synchronous approach, this saturates the connection pool and the request queue grows, so newer requests risk being dropped because of long response times.

Asynchronous approach

In an event-based system, requests are far less likely to be dropped, because the webhook producer receives a response as soon as the consumer acknowledges the webhook.
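The difference can be illustrated with a deliberately simplified model. It assumes that a synchronous server handles at most `capacity` requests from a burst and that the rest time out, while a queue acknowledges everything and drains at `capacity` messages per tick. Both functions and all numbers are hypothetical.

```python
from collections import deque


def synchronous(burst: int, capacity: int) -> dict:
    """Toy model: requests beyond the server's capacity time out and are dropped."""
    handled = min(burst, capacity)
    return {"handled": handled, "dropped": burst - handled}


def queued(burst: int, capacity: int) -> dict:
    """Toy model: every request is buffered, then drained at `capacity` per tick."""
    buffer = deque(range(burst))
    handled = 0
    ticks = 0
    while buffer:
        for _ in range(min(capacity, len(buffer))):
            buffer.popleft()
            handled += 1
        ticks += 1
    return {"handled": handled, "dropped": 0, "ticks": ticks}


sync_result = synchronous(burst=100, capacity=30)
queue_result = queued(burst=100, capacity=30)
```

In this model the queue trades latency for completeness: the burst takes several ticks to clear, but nothing is lost.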

Failure recovery

This is the ability to recover from a fault within the architecture when sending and processing webhooks.

Synchronous approach

In a typical HTTP request-based model, the client shares a substantial part of the responsibility of ensuring a request is successfully processed. Thus, when a request fails, the client will retry the request or send a notification that can trigger a retry. The client initiating the request, whether human or machine, is aware of the importance of the request.

However, in a fire-and-forget style of webhook communication, the provider that fires the webhook prioritizes delivery over error recovery, so much of the burden of recovering from a failure falls on the consumer.

Asynchronous approach

Event-driven architecture addresses resiliency and prioritizes proper error handling and recovery by temporarily persisting events until they are successfully processed.

Data loss

This is a situation where the information about an event can no longer be recovered due to system failure.

Synchronous approach

When a request fails in a typical HTTP request-based model, the server has no recollection of the request. The only way to get the information is if the client retries the request. If the request is not retried and successfully processed, it can be lost forever.

Suppose the success of the request is the difference between a happy returning customer and a frustrated one. In that case, such data loss makes it almost impossible to rescue the situation.

Asynchronous approach

The asynchronous style of the event-driven architecture allows the temporary persistence of event data until the event is successfully processed.

This ability helps you recover from transient faults that could otherwise cause lasting damage.
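As a rough sketch of "persist until successfully processed", the example below stores each event before acknowledging it and marks it done only after the handler succeeds. The table layout, the `receive` and `process_pending` functions, and the event payload are all assumptions made for illustration. SQLite's in-memory mode keeps the example self-contained; a file path would be needed for rows to survive a restart.

```python
import json
import sqlite3

db = sqlite3.connect(":memory:")  # assumption: use a file path for real persistence
db.execute("CREATE TABLE events (id TEXT PRIMARY KEY, body TEXT, status TEXT)")


def receive(event_id: str, body: dict) -> None:
    """Persist the event before acknowledging it."""
    db.execute(
        "INSERT OR IGNORE INTO events VALUES (?, ?, 'pending')",
        (event_id, json.dumps(body)),
    )
    db.commit()


def process_pending(handler) -> None:
    """Try every pending event; mark it done only if the handler succeeds."""
    rows = db.execute(
        "SELECT id, body FROM events WHERE status = 'pending'"
    ).fetchall()
    for event_id, body in rows:
        try:
            handler(json.loads(body))
        except Exception:
            continue  # the row stays pending for a later attempt
        db.execute("UPDATE events SET status = 'done' WHERE id = ?", (event_id,))
        db.commit()


def count_pending() -> int:
    return db.execute(
        "SELECT COUNT(*) FROM events WHERE status = 'pending'"
    ).fetchone()[0]


attempts = {"count": 0}


def flaky_handler(body: dict) -> None:
    """Hypothetical handler that hits a transient fault on its first call."""
    attempts["count"] += 1
    if attempts["count"] == 1:
        raise RuntimeError("transient fault")


receive("evt_1", {"type": "order.paid"})
process_pending(flaky_handler)        # fails, event is kept
pending_after_failure = count_pending()
process_pending(flaky_handler)        # succeeds on the second attempt
pending_after_retry = count_pending()
```

The failed attempt costs nothing but time, because the event is still in storage when the handler is ready to try again.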

In order to understand why event-driven architecture is so helpful when handling webhooks, we must first understand how webhooks are events.

Webhooks are events

So far in this series, we have consistently emphasized that webhooks help carry information about an event from one remote application or component to another.

Because a webhook signals the event's occurrence and contains data about the event, the webhook represents the event itself.

Thus, even though webhooks wrap the event in an HTTP request, it is best to treat the webhook as an event.

Let’s take a look at the similarities between typical events and webhooks.

| Events | Webhooks |
|---|---|
| Fired when a significant action takes place and needs to be communicated | Triggered when a subscribed event takes place in an application to communicate it to the subscriber |
| Can be subscribed to by listeners | Can be subscribed to by remote integrations |
| Don’t expect an acknowledgment or response | Expect an acknowledgment but don’t depend on it |
| Function primarily as a notification about an event and can contain data about the event | Function primarily as a notification about an event and can contain data about the event |
| Can be fired multiple times for the same event | Can be fired multiple times for the same event |
| Sent as an event object | Sent as an HTTP request wrapping an event object |

With so many similarities, it is clear that webhooks are simply events delivered through a different conduit. As such, it makes sense that an architectural style built to handle event-driven applications is best suited for processing webhooks.
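Treating a webhook as an event can be as simple as unwrapping the HTTP body into an event object as soon as it arrives. The sketch below assumes a JSON payload with `id`, `type`, and `data` fields; real providers use their own payload shapes, so these field names are hypothetical. Because the same event can be fired more than once, it also tracks the IDs it has already seen.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Event:
    id: str
    type: str
    data: dict = field(default_factory=dict)


def event_from_webhook(body: bytes) -> Event:
    """Unwrap the event carried in the webhook's HTTP request body."""
    payload = json.loads(body)
    return Event(
        id=payload["id"],
        type=payload["type"],
        data=payload.get("data", {}),
    )


seen_ids = set()  # assumption: in-memory; a shared store would be needed across workers


def is_duplicate(event: Event) -> bool:
    """Return True if this event ID has been seen before, and record it otherwise."""
    if event.id in seen_ids:
        return True
    seen_ids.add(event.id)
    return False


raw = b'{"id": "evt_123", "type": "invoice.paid", "data": {"amount": 4200}}'
event = event_from_webhook(raw)
first_delivery = is_duplicate(event)
redelivery = is_duplicate(event_from_webhook(raw))
```

Once the event is unwrapped, the rest of the pipeline no longer needs to know it arrived over HTTP.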

Why you should start using queues for your webhooks

One of the significant advantages of the event-driven architectural style, besides the fact that it introduces asynchronous processing to your handling of webhooks, is that it can be used as a standalone architecture style or embedded in an existing architecture.

One of the quickest ways to implement this architecture, especially in existing infrastructures, is through a queueing system.

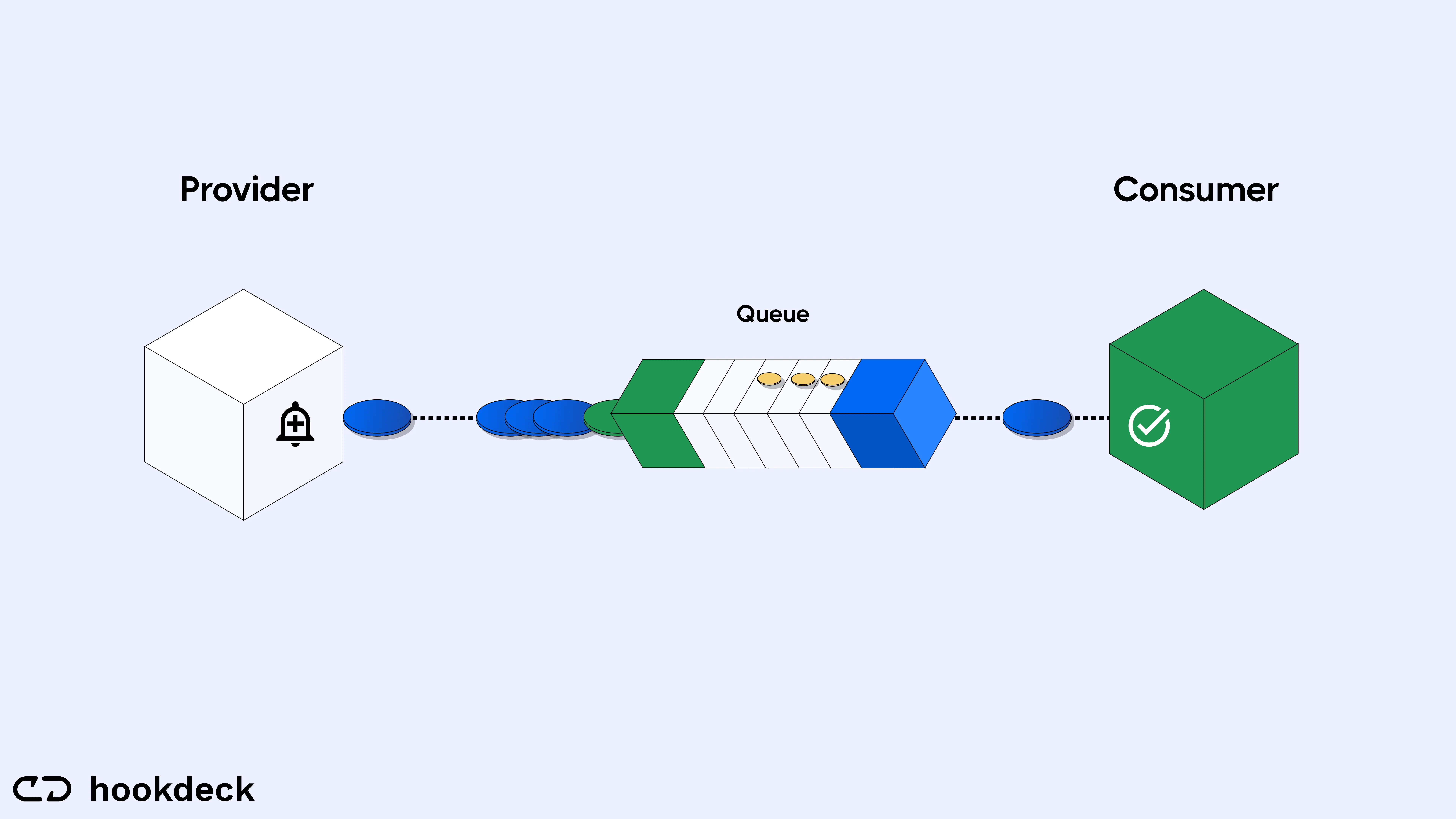

Inserting a queue between your webhook providers (Stripe, Shopify, etc.) and your server instantly introduces asynchronous processing into your infrastructure. It does this by acknowledging your webhooks after receiving them, buffering them in temporary storage, and serving them to your consumer at a rate it can handle.

Whether it's open-source queueing technologies like Kafka and RabbitMQ or managed queueing services like Amazon SQS and Google Pub/Sub, queues help implement the resiliency features that event-driven architectures promote.

Let’s look at some of these features and how queues help us achieve them.

Asynchronous processing

One of the quickest ways to implement asynchronous processing is by adding a queue between your webhook provider and consumer.

A queuing system acknowledges the webhook request and immediately adds it as a message to the queue. The consumer can then pick up this message and process it.

Webhook retry

Sometimes a consumer fails while processing a webhook. These failures can be due to transient faults, like server crashes, or to issues that require a fix.

A queue helps persist the webhook message so the consumer can pick it up again for processing when the issue is fixed.
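A minimal sketch of this retry behavior is shown below. It assumes each message carries its own attempt counter and that a message is set aside for inspection after `MAX_ATTEMPTS` failures; the `consume` function, the message shape, and the limit of three are all hypothetical choices, not the behavior of any particular queueing product.

```python
import queue

MAX_ATTEMPTS = 3  # assumed retry limit


def consume(q: queue.Queue, handler) -> list:
    """Process messages, requeueing failures until they reach MAX_ATTEMPTS."""
    parked = []  # messages that kept failing and need a human to look at them
    while not q.empty():
        message = q.get()
        try:
            handler(message["body"])
        except Exception:
            message["attempts"] += 1
            if message["attempts"] < MAX_ATTEMPTS:
                q.put(message)  # back of the queue for another try
            else:
                parked.append(message)
    return parked


calls = {}


def handler(body: str) -> None:
    """Hypothetical handler: one message recovers on its third try, one never does."""
    calls[body] = calls.get(body, 0) + 1
    if body == "recovers" and calls[body] < 3:
        raise RuntimeError("transient fault")
    if body == "broken":
        raise ValueError("needs a code fix")


q = queue.Queue()
q.put({"body": "recovers", "attempts": 0})
q.put({"body": "broken", "attempts": 0})
parked = consume(q, handler)
```

The message that hits a transient fault is eventually processed, while the one that needs a fix is kept rather than lost.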

Request throttling/Rate limiting

One of the valuable features of queues is the ability to serve requests to a consuming server within the server's capacity. Depending on the technology, a queueing system can also check the health of its consumers and re-route or persist messages when it detects that a particular consumer is down. This helps you avoid server crashes due to overload.

You can also configure queues to serve messages within a measured threshold that ensures that consumers are not overloaded.
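One simple way to picture such a threshold is a delivery loop that pauses between messages. The `drain` function and its `max_per_second` parameter below are hypothetical, and real queueing systems expose their own rate-limit settings. The `sleep` argument is injectable only so the example can run without actually waiting.

```python
import queue
import time


def drain(q: queue.Queue, handler, max_per_second: float, sleep=time.sleep) -> int:
    """Deliver queued messages no faster than `max_per_second`."""
    interval = 1.0 / max_per_second
    delivered = 0
    while True:
        try:
            message = q.get_nowait()
        except queue.Empty:
            return delivered
        handler(message)
        delivered += 1
        sleep(interval)  # pause so the consumer is not overloaded


q = queue.Queue()
for i in range(5):
    q.put(i)

handled = []
pauses = []  # records each pause instead of really sleeping
delivered = drain(q, handled.append, max_per_second=10, sleep=pauses.append)
```

With the default `sleep=time.sleep`, the same call would take about half a second to deliver the five messages.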

Batch recovery

Some failures can cause a large number of webhooks to fail at the consumer end. With queues, all these webhooks are persisted, and once the issue with the consumer is fixed, the consumers automatically pick up the webhooks and process them.
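The sketch below shows the idea with a hypothetical `Consumer` that can be marked unhealthy. While it is down, the `deliver` function leaves every message in the backlog. Once it is back, one pass clears the backlog in order. The class, the function, and the backlog size are assumptions for illustration.

```python
from collections import deque


class Consumer:
    """Hypothetical consumer that rejects messages while it is down."""

    def __init__(self) -> None:
        self.healthy = True
        self.handled = []

    def handle(self, message: int) -> None:
        if not self.healthy:
            raise ConnectionError("consumer is down")
        self.handled.append(message)


def deliver(backlog: deque, consumer: Consumer) -> None:
    """Deliver in order, stopping at the first failure so nothing is lost."""
    while backlog:
        try:
            consumer.handle(backlog[0])
        except ConnectionError:
            return  # keep the rest buffered until the consumer recovers
        backlog.popleft()  # remove a message only after it was handled


consumer = Consumer()
consumer.healthy = False          # an outage begins
backlog = deque(range(1000))      # webhooks keep arriving and are buffered

deliver(backlog, consumer)
buffered_during_outage = len(backlog)

consumer.healthy = True           # the issue is fixed
deliver(backlog, consumer)
```

Nothing needs to be replayed by the provider, because the webhooks never left the queue.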

Based on the points discussed above, it is clear that a queue is an essential component when it comes to bringing reliability into the infrastructure built to handle webhooks. All we need to do is place a queue between our webhook provider and consumers and all the resiliency features will become available…but wait, not so fast.

Queues are not so easy to implement and, just like any other new component you bring into your infrastructure, they add an extra layer of complexity. You first need to choose a queueing technology that best fits your setup, and based on your choice, you might need to build some custom features around it to check all the boxes on resiliency and observability best practices.

Conclusion

In this article, we have established the need to process our webhooks asynchronously to make them resilient.

We also touched on queues and their role in implementing an event-driven pattern through asynchronous processing.

The next article will focus on the features you need to look out for when choosing a reliable queueing solution. We will discuss the pros and cons of existing solutions and how to pick the one that best suits your use case. We will also introduce the observability side of things.

Happy coding!
