Webhook Infrastructure Components and Their Functions

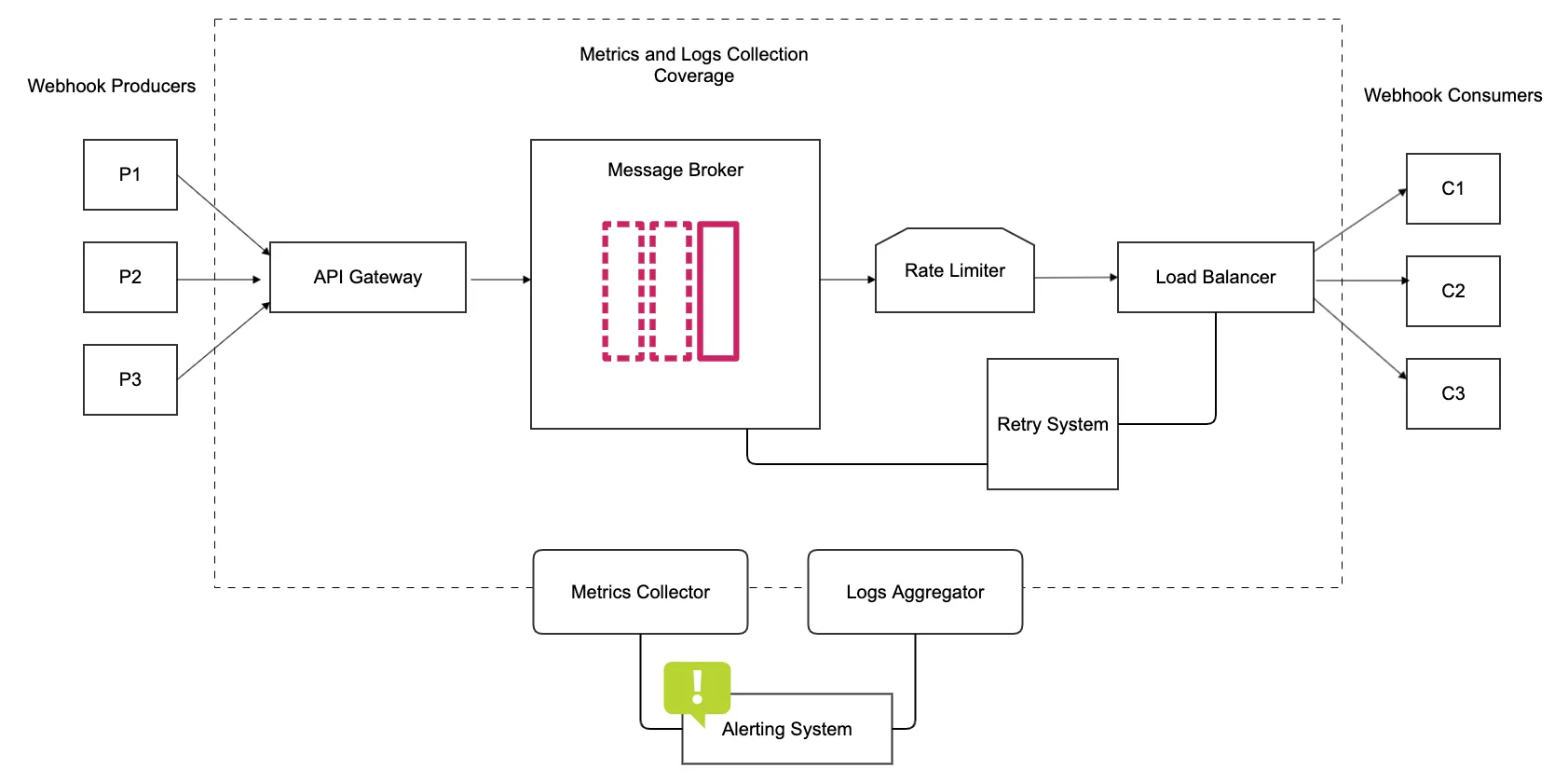

In a previous article in this series, we looked at the requirements for a reliable webhook infrastructure from a stakeholder’s perspective. We then took the problem statement and broke it down into operational requirements for a webhook handling system that is reliable, fault-tolerant, and highly performant. Lastly, we proposed an architectural blueprint for the solution.

This article follows up by taking a close look at each component in the proposed architecture. For each one, we define its purpose, implementation options, design, and scalability considerations.

Finally, we introduce the topic of performance for each component and how it factors into the overall performance of the system.

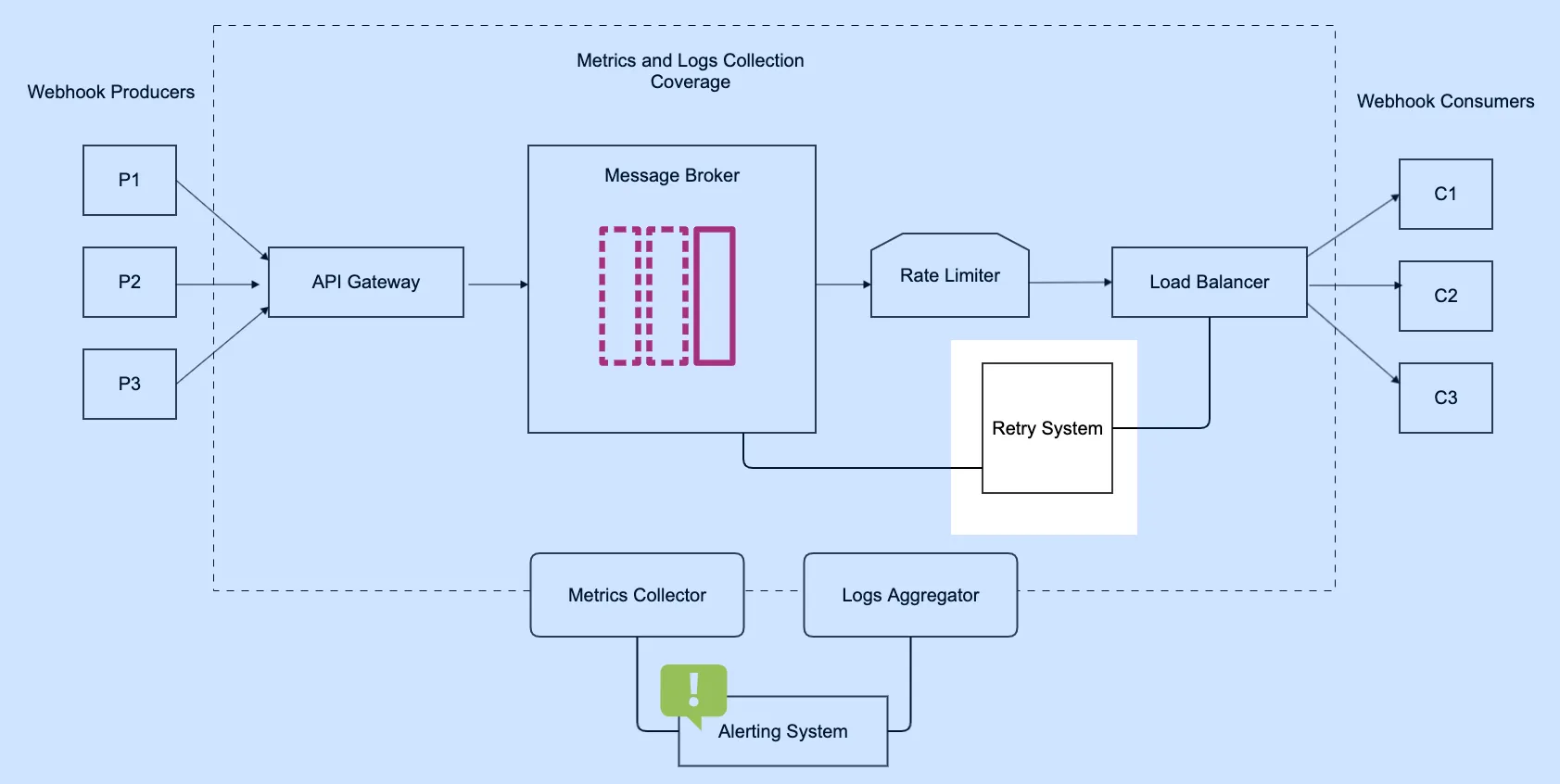

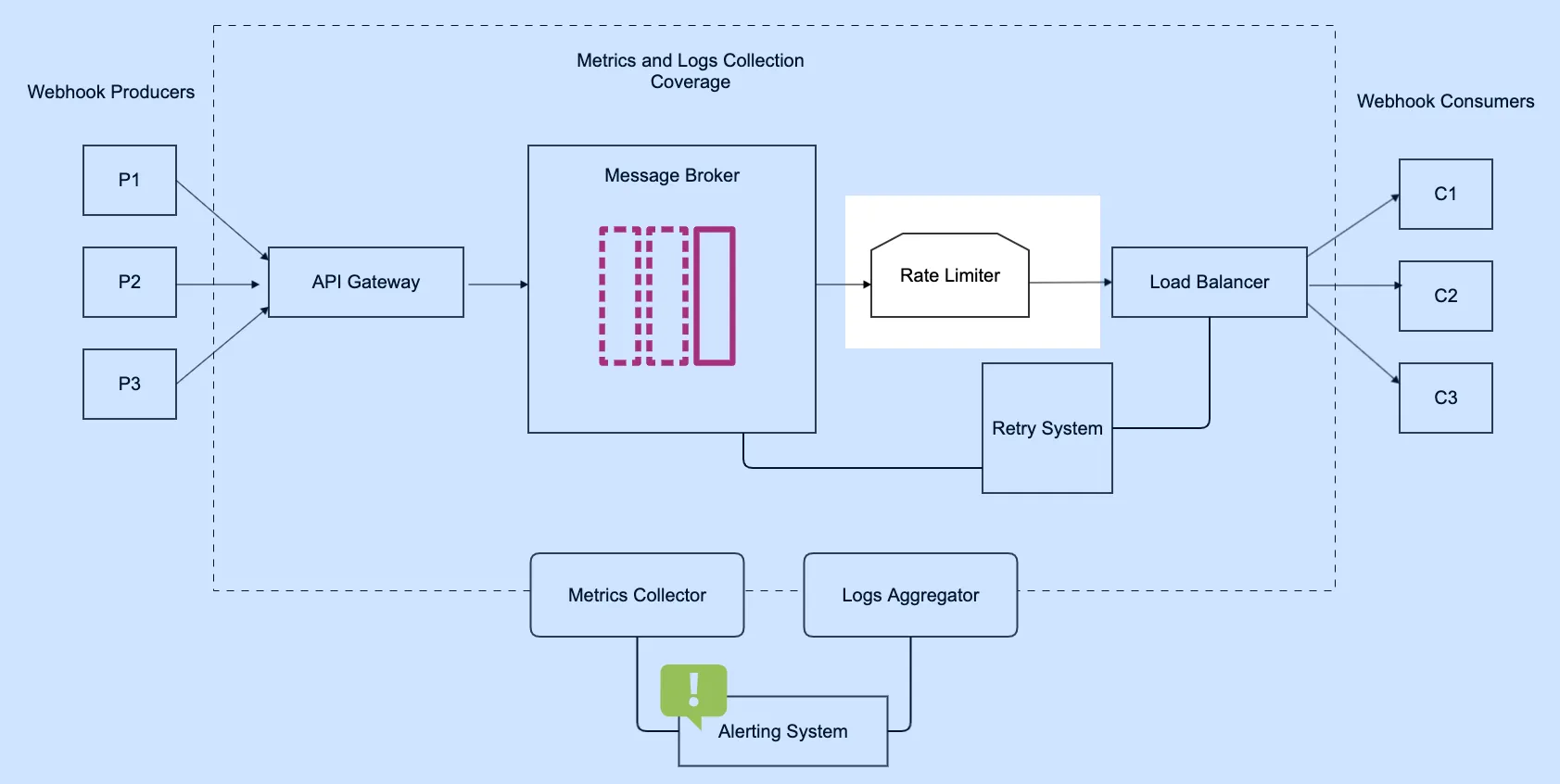

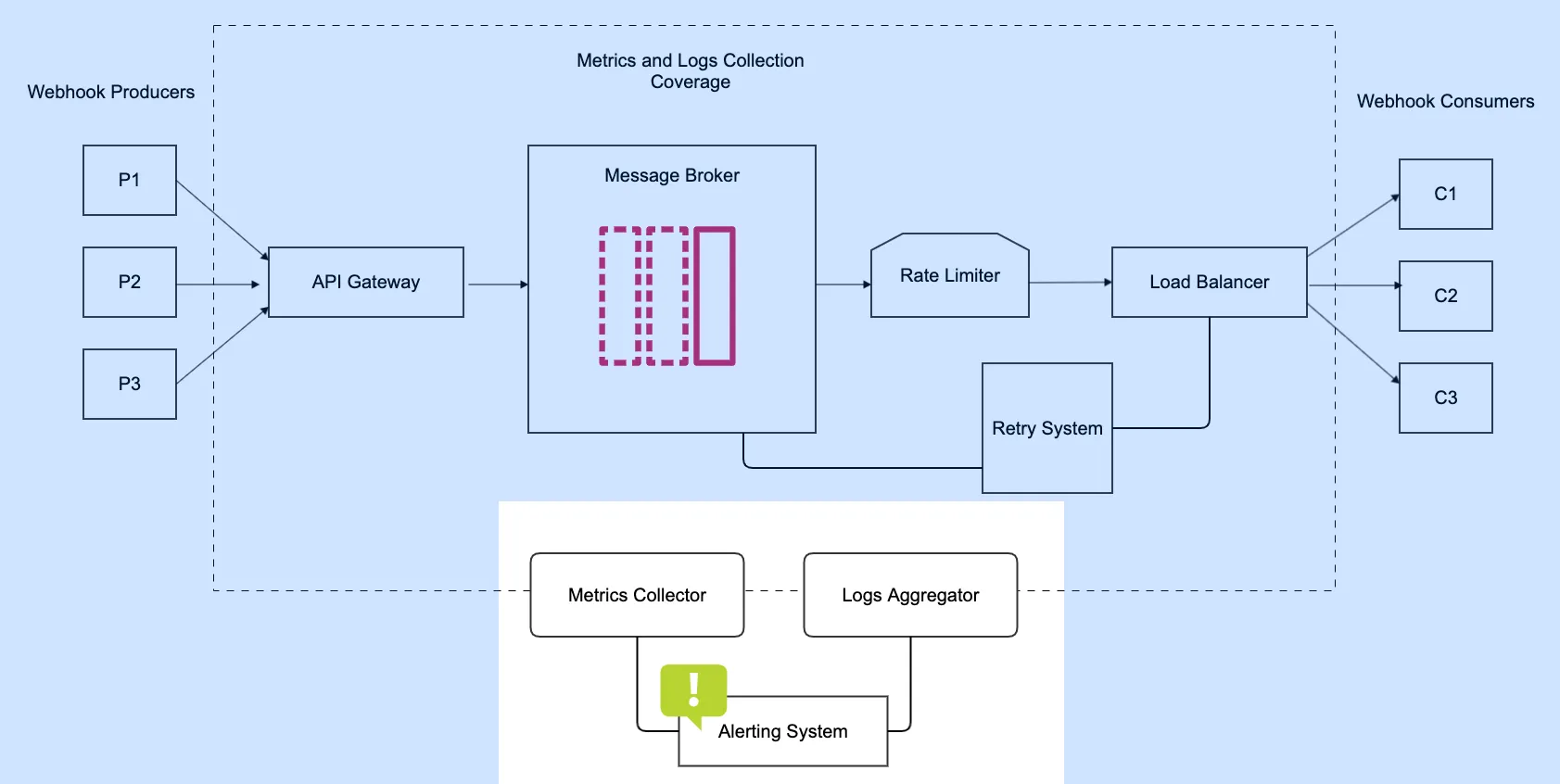

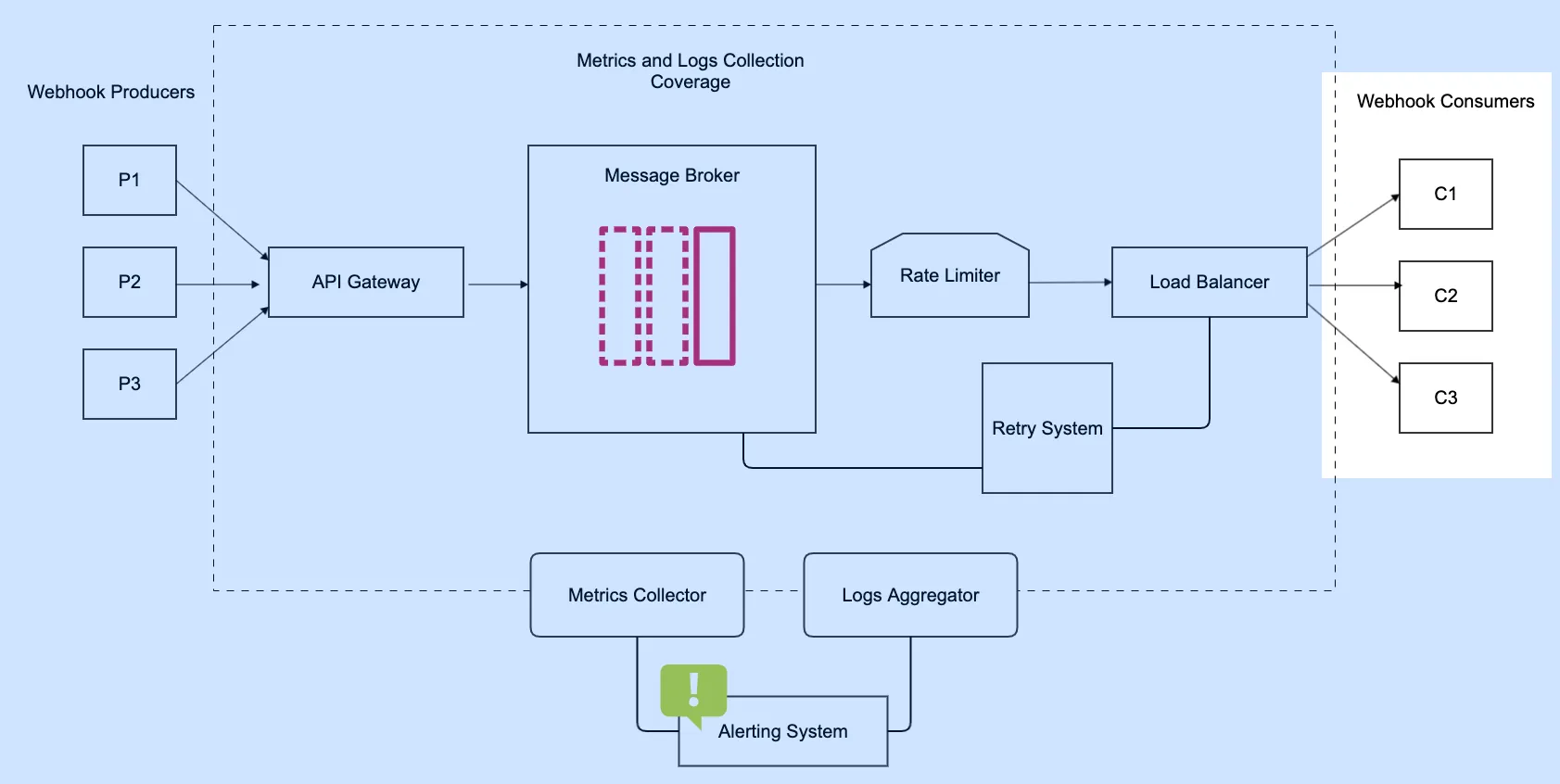

Review of the proposed webhook infrastructure design

To begin, let’s bring back our proposed solution and then walk through the architecture component by component.

To recap, here is a summary of the workflow of the proposed solution:

- Webhook producers trigger webhook requests and send them to an API gateway.

- The API gateway adds the webhook messages to message queues in the message broker.

- The message broker buffers the webhook messages to process them asynchronously and routes them to a pool of consumers.

- A rate limiter sits between the message broker and the consumers and pushes webhook messages to consumers at a rate that they can handle.

- When processing fails, dead letter queues collect failed messages to be replayed by a retry system.

- Metrics and logs are collected throughout the system components.

- Metrics and logs are used to set up alerts for cases where administrators need to take action.

Now that you’re all caught up with the proposed solution, let’s begin our breakdown. Just like the analysis in the previous article, our breakdown of components will also be a discovery process to understand what works and what doesn’t.

This means that our proposed design is subject to change based on new information discovered as we analyze each component in the system.

The webhook producer

The first component in the system is the webhook producer, sometimes referred to as the webhook publisher. It is the source of webhook requests and sends them over HTTP, though most third-party webhook providers like GitHub, Okta, and Shopify use (and enforce) HTTPS.

Most webhook producers use a fire-and-forget style of messaging. This means they do not wait for the webhook to be processed, and they often enforce a timeout on how long the consumer may take to respond.

The majority of webhook producers are third-party SaaS applications like GitHub and Shopify. However, you can also produce webhooks within your custom applications for internal use or integration with external applications.

Function within the system

Webhook producers initiate or trigger the asynchronous processing operation. The main responsibility of producers is to create valid messages to be sent to the message broker via the API gateway.

The term “valid” in this context means that the message contains all the necessary information for the webhook to be processed, and it’s also in the correct format to be received by the API gateway. Producers target the API gateway for their messages to be added to the message broker.

To handle webhooks from producers (external or internal) effectively, certain factors need to be taken into account. These factors impact how webhook processing is done, the success rate of processing webhooks, and the overall reliability of the system. Check out our article “Implementation Considerations for Webhook Producers in Production Environments” for more information.

The API gateway

An API gateway interfaces HTTP with other protocols and applications. This lets HTTP clients interact with other applications without needing to know their protocols.

Function within the system

The main function of the API gateway in this architecture is to serve as a protocol converter between the webhook producers (HTTP clients) and the message broker. Message brokers like RabbitMQ use a binary protocol and thus cannot accept HTTP requests directly; they need a proxy in front of them.

Our API gateway will speak HTTP on the side of the webhook producer and the protocol of the message broker on the other side. This way, the gateway serves as a message relay, converting HTTP requests into messages the broker can ingest.

Another function of the API gateway is to serve as a security accelerator. Security accelerators receive and decrypt HTTPS traffic and forward it as plain HTTP, a practice commonly known as TLS termination.

Some gateways use specialized decryption software or hardware to decrypt secure traffic more efficiently than the origin server could, taking that load off the origin server (in this case, the webhook consumer).

Our API gateway will also add a Trace ID to each webhook request for logging and tracking purposes. The Trace ID is a unique string identifier that appears in, and can be searched across, the logs of every component the request passes through.

The decryption and tracing functions will be performed before the protocol conversion in the API gateway.
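To make that ordering concrete, here is a minimal Python sketch of the gateway's pre-processing step, assuming the request has already been decrypted: it generates a trace ID and wraps the JSON body in a broker-ready envelope. The `make_envelope` helper, the `X-Trace-Id` header name, and the envelope fields are hypothetical choices for illustration, not the API of any particular gateway.

```python
import json
import uuid
from typing import Mapping

# Hypothetical header name; use whatever your log pipeline expects.
TRACE_HEADER = "X-Trace-Id"


def new_trace_id() -> str:
    """Return a random, unique identifier (32 hex characters) for one request."""
    return uuid.uuid4().hex


def make_envelope(headers: Mapping[str, str], body: bytes, routing_key: str) -> dict:
    """Wrap an already-decrypted HTTP request in a broker-ready message.

    Tracing happens here, before protocol conversion, so every later log
    line for this webhook can carry the same trace ID.
    """
    return {
        "routing_key": routing_key,
        # The gateway's own trace ID overrides any value the producer sent.
        "headers": {**dict(headers), TRACE_HEADER: new_trace_id()},
        "payload": json.loads(body),
    }


if __name__ == "__main__":
    message = make_envelope({"Content-Type": "application/json"},
                            b'{"event": "order.created"}', "orders")
    print(message["headers"][TRACE_HEADER])  # a new random ID on every run
```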

Implementation options

An API gateway is simply a proxy server for pre-processing and routing traffic to one or more services. There are various server software and cloud services that can be configured and deployed to serve this purpose. These include:

- Nginx: A widely used load-balancer and reverse proxy server application

- Amazon API Gateway combined with AWS Lambda: a managed gateway that passes requests to serverless functions

You can also use an application server written in almost any programming language to receive, process, and relay your webhook requests to the message broker.

Just like the webhook producer, an API gateway that transforms webhook messages and sends them to a message broker has its own recommended best practices for production environments.

The webhook message broker

A message broker buffers and distributes messages asynchronously. Simple message queueing systems can be built out of flat files, a custom component backed by a database, and so on. Message brokers, however, combine all the functions needed for a reliable and full-featured asynchronous processing system.

Message brokers are also optimized for high concurrency and high throughput because the ability to enqueue messages fast is one of their key responsibilities.

This message-oriented middleware is deployed as a standalone application and is scaled independently.

Function within the system

This is the heart of the asynchronous processing functions of the architecture. It is the component that takes care of accepting, routing, persisting, and delivering webhook messages. It is also the key component for decoupling webhook producers from the consumers.

When the API gateway receives a webhook from a producer, it converts the message to the broker’s protocol (e.g. AMQP or STOMP). The gateway then adds the webhook message to the message broker and sends a response back to the producer once it receives an acknowledgment from the broker that the message has been added.

As the message is being added to the broker, the gateway also adds the routing key for the message. This key enables the broker’s routing system to add the message to the appropriate message queue (or topic). Consumers subscribed to the queue can then pick up the messages and process them accordingly.
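The publish-then-acknowledge flow and routing-key lookup described above can be sketched in a few lines of Python. `ToyBroker` is a hypothetical in-memory stand-in for a broker like RabbitMQ, where the acknowledgment would come from a mechanism such as publisher confirms; this toy simply returns a boolean, and the 202/503 status codes are an assumed convention rather than something a broker dictates.

```python
from collections import deque


class ToyBroker:
    """In-memory stand-in for a real broker such as RabbitMQ (illustration only).

    Queues are bound to exact routing keys, similar to an AMQP direct exchange.
    """

    def __init__(self) -> None:
        self.queues: dict[str, deque] = {}
        self.bindings: dict[str, list[str]] = {}

    def bind(self, queue: str, routing_key: str) -> None:
        """Create the queue if needed and route messages with `routing_key` to it."""
        self.queues.setdefault(queue, deque())
        self.bindings.setdefault(routing_key, []).append(queue)

    def publish(self, routing_key: str, message: dict) -> bool:
        """Store the message in every bound queue; True plays the role of the ack."""
        targets = self.bindings.get(routing_key, [])
        for name in targets:
            self.queues[name].append(message)
        return bool(targets)


def handle_webhook(broker: ToyBroker, routing_key: str, message: dict) -> int:
    """Gateway logic: reply to the producer only after the broker has acknowledged."""
    if broker.publish(routing_key, message):
        return 202  # Accepted: queued for asynchronous processing
    return 503  # not stored; the producer should retry later


if __name__ == "__main__":
    broker = ToyBroker()
    broker.bind("orders-queue", "orders")
    print(handle_webhook(broker, "orders", {"event": "order.created"}))  # 202
    print(handle_webhook(broker, "refunds", {"event": "refund.created"}))  # 503
```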

When more than one subscriber is subscribed to a queue, the message broker performs the function of load-balancing the message-processing task amongst the subscribed consumers (which means we may not need a separate load balancer component for our webhook consumers after all).
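A minimal Python sketch of that load-balancing behavior: each message goes to exactly one consumer, rotating through the pool. RabbitMQ, for instance, dispatches a queue's messages to its consumers round-robin by default (prefetch settings can change the exact distribution); `dispatch_round_robin` is a hypothetical illustration of the idea, not broker code.

```python
from itertools import cycle
from typing import Callable, Iterable


def dispatch_round_robin(messages: Iterable[dict],
                         consumers: list[Callable[[dict], None]]) -> None:
    """Deliver each message to exactly one consumer, rotating through the pool."""
    next_consumer = cycle(consumers)
    for message in messages:
        next(next_consumer)(message)


if __name__ == "__main__":
    received: dict[str, list[dict]] = {"a": [], "b": [], "c": []}
    pool = [received[name].append for name in ("a", "b", "c")]
    dispatch_round_robin(({"id": i} for i in range(9)), pool)
    print({name: len(msgs) for name, msgs in received.items()})  # {'a': 3, 'b': 3, 'c': 3}
```

Because each message reaches only one consumer, adding consumers to the pool spreads the load without a separate load balancer.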

Implementation options

Like databases and web servers, brokers are off-the-shelf software applications that usually do not require any custom code; they are configured, not customized.

Message brokers can be deployed using open-source technologies or cloud providers. RabbitMQ and Apache Kafka are two of the most popular message brokers today, and some cloud services offer hosted versions of these technologies. They are also available as containerized applications, making them easy to spin up in a cloud-native environment.

To use these open-source options, you need a good understanding of how the technology works. You need to be familiar with how it routes messages, handles permissions, and scales with an increased workload, as well as general performance considerations.

Another option is to use a managed messaging service from a cloud provider. Amazon Web Services, Google Cloud Platform, and Azure all have message queueing offerings you can subscribe to.

AWS has Amazon Simple Queue Service (SQS), GCP has Cloud Pub/Sub, and Azure has a suite of messaging services that includes Azure Service Bus.

One advantage of having a cloud provider handle your queueing is that it is quicker to set up. The learning curve is also less steep, and the provider often takes care of your scalability, performance, and monitoring needs (though usually at a price).

The webhook retry system

Transient faults are common in today’s cloud architectures. These include a momentary loss of network connectivity to components and services, timeouts arising from busy services, or the temporary unavailability of a service.

Because these faults are temporary, the system needs to be designed to handle them and self-heal from the effects. A retry system is one of the features that can be built into your architecture at failure-prone points to ensure that any operation that fails is retried.

Function within the system

The retry system in our architecture sits between the webhook consumers and the message broker. When the consumer raises an exception while processing a webhook, the retry system receives the error and determines if and how the webhook request will be retried.

If the fault is transient, the retry system either re-queues the request in the message broker or adds it to the broker's dead-letter queue, depending on the type of error. Webhook messages can then be retried after a delay, on the assumption that the issue that caused the failure will have been resolved by then.

If necessary, this process is repeated, with the delay increasing on each attempt until a maximum number of attempts is reached. How much the delay grows can depend on the nature of the failure and the likelihood that it will be corrected during the delay period; the increase can be either incremental (linear) or exponential.
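One way to express this policy, as a Python sketch: a transient failure is re-queued with a growing delay, while a permanent failure or an exhausted retry budget goes to the dead-letter queue. It assumes the consumer's failure can be mapped to an HTTP-style status code; the set of transient codes, the 1-second base delay, the 300-second cap, and the 5-attempt limit are hypothetical defaults to tune for your own system.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical classification: statuses that usually indicate a transient fault.
TRANSIENT_STATUSES = {408, 429, 500, 502, 503, 504}


@dataclass
class RetryDecision:
    action: str                           # "requeue" or "dead_letter"
    delay_seconds: Optional[float] = None


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 300.0,
                  exponential: bool = True) -> float:
    """Delay after failed attempt number `attempt` (1-based), capped at `cap`.

    Exponential: base * 2**(attempt - 1); incremental: base * attempt.
    """
    raw = base * 2 ** (attempt - 1) if exponential else base * attempt
    return min(raw, cap)


def decide(status: int, attempt: int, max_attempts: int = 5) -> RetryDecision:
    """Choose what to do with a webhook whose `attempt`-th processing failed."""
    if status in TRANSIENT_STATUSES and attempt < max_attempts:
        return RetryDecision("requeue", backoff_delay(attempt))
    # Permanent error or retry budget used up: park it in the dead-letter queue.
    return RetryDecision("dead_letter")


if __name__ == "__main__":
    for attempt in range(1, 6):
        print(attempt, decide(503, attempt))
```

The cap keeps exponential growth from producing delays longer than the data is useful for.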

Implementation options

Unlike the other components discussed so far, a retry system is not an off-the-shelf component. While message brokers have features that support retrying failed operations during message consumption, you will most likely have to build a custom retry system based on your needs. For example, in this article, Uber describes how it built a reliable retry system on Kafka using dedicated retry topics and dead-letter queues.

Cloud providers also offer services that help you configure request retries to your services. For example, Google Cloud Spanner allows you to configure timeouts and retries using a special JSON configuration file.

Each AWS SDK also implements automatic retry logic (including for Amazon Simple Queue Service calls) and lets you configure retry settings such as the maximum number of attempts. Configurable retry behavior like this is a basic requirement for any retry system.

The webhook rate limiter

Every component in a cloud architecture, including the server it runs on, has limited capacity. Exceeding this capacity can exhaust the application's resources and cause it to shut down. Such a shutdown can be costly to business operations and often cascades into failures in upstream services. This is why most cloud services are throttled to a fixed API rate limit that consumers must not exceed.

Rate limiting helps reduce traffic and potentially improves throughput by reducing the number of requests/records sent to a service over a given period.

Function within the system

In our architecture, a rate limiter is used to control the consumption of messages from the broker by the pool of webhook consumers. This ensures that the capacity of the consumers is not exceeded, thereby greatly reducing the probability of one of them shutting down.

The rate limiter reads records from the message broker at a controlled rate that stays within the consumer's capacity (the number of webhooks a consumer can process within a given period).

One way the rate limiter avoids overburdening consumers is by sending small batches of requests frequently instead of large volumes at once. For example, if a consumer can process 100 webhooks per second, the rate limiter can even out the workload by sending 20 webhooks every 200 milliseconds.
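The arithmetic in that example (100 webhooks per second evened out into 20 every 200 milliseconds) can be sketched as a simple pacing loop in Python. The `deliver` and `paced_batches` names and the injected `send` and `sleep` callables are hypothetical; in a real system `send` would hand a batch to the consumer pool.

```python
import time
from typing import Callable, Iterator, Sequence


def paced_batches(messages: Sequence[dict], rate_per_second: int,
                  interval_ms: int) -> Iterator[Sequence[dict]]:
    """Split messages into batches that fit the consumer's rate in each interval."""
    # Integer math avoids float rounding: 100 msg/s * 200 ms // 1000 = 20 per batch.
    batch_size = rate_per_second * interval_ms // 1000
    if batch_size < 1:
        raise ValueError("interval too short: use interval_ms >= 1000 / rate_per_second")
    for start in range(0, len(messages), batch_size):
        yield messages[start:start + batch_size]


def deliver(messages: Sequence[dict], send: Callable[[Sequence[dict]], None],
            rate_per_second: int = 100, interval_ms: int = 200,
            sleep: Callable[[float], None] = time.sleep) -> None:
    """Push one batch per interval so the consumer never receives a large burst.

    This simple loop ignores how long `send` takes, so the real rate ends up
    slightly below the target, never above it.
    """
    for batch in paced_batches(messages, rate_per_second, interval_ms):
        send(batch)
        sleep(interval_ms / 1000)
```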

Implementation options

Like the retry system, a rate-limiting system is not an off-the-shelf component that can be downloaded and installed. Rate limiters are custom components that can be implemented using different algorithms, each with pros and cons. Rate limiters can also support different sets of throttling rules; for example, rate limiters can throttle by the number of requests, packet size, IP, etc.
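As one example of such an algorithm, here is a minimal token-bucket limiter in Python. It is a sketch, not Hookdeck's or any library's implementation: tokens refill at `rate` per second up to `capacity`, so bursts are capped at `capacity` while the long-run average stays at `rate`. The injectable `clock` parameter is a hypothetical addition that makes the class testable without real waiting.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket rate limiter (one of several possible algorithms).

    Tokens refill continuously at `rate` per second, up to `capacity`.
    Each webhook handed to a consumer spends one token.
    """

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity      # start full
        self.clock = clock
        self.last = clock()

    def try_acquire(self, tokens: float = 1.0) -> bool:
        """Spend `tokens` if available; return False (caller waits) otherwise."""
        now = self.clock()
        # Refill for the time elapsed since the previous call, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= tokens:
            self.tokens -= tokens
            return True
        return False
```

With `rate=100` and `capacity=20`, the bucket enforces the same 100-per-second ceiling as the earlier example while never letting more than 20 webhooks through at once.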

A rate limiter can be implemented as a separate service (rate-limiting middleware) or within the application code. Check out this blog to see how Hookdeck was able to implement a scalable and highly reliable rate limiter for webhooks.

If you do not have the engineering knowledge or resources to implement a rate limiter, you can always subscribe to a cloud provider’s API gateway that offers rate-limiting features.

For example, Amazon API Gateway offers rate-limiting features that can be configured to suit your infrastructure needs.

The webhook logging and monitoring system

To build a reliable and fault-tolerant webhook processing pipeline, we need full visibility into the entire lifecycle of each webhook. Logging and monitoring will help us achieve that.

By collecting logs and metrics from each component to track processes and errors, administrators can troubleshoot failures quickly and effectively, and learn how the system behaves so they can make improvements that fine-tune its performance.

We will also adopt tracing mechanisms that help us view all the steps involved in the webhook processing pipeline.

Function within the system

Our architecture makes use of a central logs aggregator and a metrics collector, which pull logs and metrics, respectively, from each component within the webhook infrastructure.

The logs aggregator collects information like webhook metadata, success or failure of operations, time of operations, error data, reasons for failure, the status of a webhook message within a component, and more.
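For the aggregator to search by trace ID, each component should emit logs as structured records rather than free text. Below is a minimal sketch using Python's standard `logging` module that writes one JSON object per line; the `JsonFormatter` and `make_logger` names and the field names (`trace_id`, `webhook_id`, `status`, `error`) are hypothetical and should match whatever your aggregator indexes.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON line that a log aggregator can index."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "component": record.name,
            "message": record.getMessage(),
        }
        # Fields passed via `extra=` become attributes on the record.
        for key in ("trace_id", "webhook_id", "status", "error"):
            if hasattr(record, key):
                entry[key] = getattr(record, key)
        return json.dumps(entry)


def make_logger(component: str, stream=None) -> logging.Logger:
    """Create a logger for one component; `stream` defaults to stderr."""
    logger = logging.getLogger(component)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


if __name__ == "__main__":
    log = make_logger("webhook-consumer")
    log.info("webhook processed",
             extra={"trace_id": "trace-123", "webhook_id": "wh_1", "status": "success"})
```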

Our metrics collector, on the other hand, collects usage data for each component within the infrastructure. Information such as memory usage, CPU usage, system availability, rate of failures, the volume of network traffic, number of concurrent requests, and more can be collected to better understand and optimize the system.

The logs and metrics collectors are also integrated with notification systems that help communicate useful information about the components and webhooks to administrators. For example, if a webhook fails multiple times and exceeds its maximum number of retries, an administrator can be alerted to look into the issue and reconcile the webhook within the system.

The alerting system is also used to trigger automated processes like retries.
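In practice this rule would live in your monitoring tool's alerting configuration, but the logic is simple enough to sketch in Python. `DeliveryEvent`, `check_exhausted`, and the `notify` callback (which could post to email, chat, or a paging service, or enqueue an automated retry instead) are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class DeliveryEvent:
    """One processing attempt, as reported in the logs."""
    webhook_id: str
    trace_id: str
    attempt: int
    succeeded: bool


def check_exhausted(events: Iterable[DeliveryEvent], max_attempts: int,
                    notify: Callable[[str], None]) -> list[str]:
    """Alert on every webhook whose final allowed attempt failed; return their IDs."""
    alerted = []
    for event in events:
        if not event.succeeded and event.attempt >= max_attempts:
            notify(f"webhook {event.webhook_id} (trace {event.trace_id}) failed "
                   f"{event.attempt} attempts; manual reconciliation needed")
            alerted.append(event.webhook_id)
    return alerted
```

Including the trace ID in the alert lets the administrator jump straight to every log line for that webhook.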

Implementation options

Logging and monitoring systems can be implemented using open-source technologies like the ELK Stack (Elasticsearch, Logstash, and Kibana) for aggregated logging or Prometheus for metrics collection. Commercial log aggregators such as Splunk, which simplify log collection, are also available.

These tools come with alerting capabilities that let you define conditions under which notifications are sent to administrators.

These tools also come with visualization features that can be used to build dashboards and reports for effective monitoring. You can also combine your monitoring tools with more advanced visualization tools like Grafana.

Cloud providers also provide their own set of monitoring and logging tools. AWS offers CloudWatch, a full-featured infrastructure monitoring tool that can be used to monitor your AWS resources and applications on AWS and on-premises.

Azure offers Azure Monitor, which helps you increase the performance and availability of your cloud infrastructure by collecting, analyzing, and acting on telemetry from your cloud and on-premises environments.

The webhook consumer

Consumers are web applications that perform the actual processing of webhooks. Their main responsibility is to translate the message in a webhook into work done within the system. Consumers often expose an API endpoint that receives webhooks from external applications, or use a broker's protocol to read messages from the message broker.

Function within the system

The final destination of webhooks in the infrastructure is the webhook consumer. Webhook consumers pull and process messages from the message broker. To spread out the workload, we deploy multiple consumers into a pool of webhook processing workers. These consumers are all subscribed to the broker’s queue and the webhook volume is distributed evenly amongst them.

Consumers also help report errors that occur during webhook processing by generating logs that can be used to trigger corrective actions to mitigate the error. For example, if the health status check for a consumer indicates that the consumer is down, we can trigger the deployment of a new instance or restart the server that went down.

Implementation options/considerations

Consumers can be implemented in different programming languages and frameworks and maintained independently of other components.

It is best to isolate webhook consumers on a separate set of servers so that their scalability does not depend on that of other components. The more independent and encapsulated the workers are, the less they affect, and depend on, the rest of the system.

Another important principle to note is that queue worker machines should be stateless just like web application servers and web service machines. By having queue workers stateless and isolated to a separate set of machines, you will be able to scale them horizontally by simply adding more servers.

For more on webhook consumers and how they work with a message broker to perform at optimal levels, check out our article entitled "Message Queues: Deep Dive." You should also consider implementing idempotency in your consumers to prevent duplicate processing.
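Idempotency can be sketched as a wrapper around the consumer's handler that records each webhook ID before processing and skips IDs it has already seen. This Python sketch assumes every message carries a unique ID under a hypothetical `id` key. Because workers should stay stateless, the record of seen IDs belongs in a shared store (for example, a database table with a unique constraint); `InMemorySeenStore` is a single-process test stand-in only.

```python
from typing import Callable, Protocol


class SeenStore(Protocol):
    """Shared record of webhook IDs that are processed or in progress."""

    def add_if_absent(self, key: str) -> bool: ...

    def discard(self, key: str) -> None: ...


class InMemorySeenStore:
    """Single-process stand-in; a real worker pool needs a shared, atomic store."""

    def __init__(self) -> None:
        self._seen: set[str] = set()

    def add_if_absent(self, key: str) -> bool:
        if key in self._seen:
            return False
        self._seen.add(key)
        return True

    def discard(self, key: str) -> None:
        self._seen.discard(key)


def make_idempotent(handler: Callable[[dict], None],
                    store: SeenStore) -> Callable[[dict], bool]:
    """Wrap `handler` so a redelivered webhook is not processed twice."""

    def wrapped(message: dict) -> bool:
        if not store.add_if_absent(message["id"]):
            return False  # duplicate delivery: skip it
        try:
            handler(message)
        except Exception:
            store.discard(message["id"])  # release the ID so a retry can reprocess it
            raise
        return True

    return wrapped
```

Releasing the ID on failure keeps idempotency from blocking the retry system. If a worker crashes outright, though, the ID stays marked, so a production store would also need an expiry or status field.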

Conclusion

In this article, we walked through each component: what it does, its purpose within the webhook infrastructure, and the different options for implementing it. A good understanding of each component helps us deploy it in a way that contributes to the overall success of the system.

The next step is to understand how to get the most out of them.

Performance metrics preview

As mentioned in the logging and monitoring section, it is important to track the way the system is being used, how resources are being utilized, and generally monitor the system’s health and performance.

Performance is mostly measured in terms of the availability of the system, operational throughput, and response time. However, to truly determine how well our system is performing or should perform, we need to define certain performance targets based on our business needs.

A recommended way of doing this is by breaking down performance targets into a set of key performance indicators (KPIs). For example, when measuring the performance of our webhook consumers, we can have the following KPIs:

- The number of webhooks processed per second

- The latency per webhook

- The response time per webhook

- The rate at which consumers generate exceptions, etc.
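As a rough illustration, the first, second, and fourth KPIs above can be computed from per-webhook processing records. This Python sketch assumes hypothetical records with receive and finish timestamps and a failure flag; it reports throughput over the observed window, a nearest-rank 95th-percentile latency, and the exception rate. Response time could be computed the same way from whichever timestamps your system records.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Record:
    """Hypothetical per-webhook record collected by the metrics pipeline."""
    received_at: float   # seconds: webhook entered the system
    finished_at: float   # seconds: consumer finished processing it
    failed: bool         # True if the consumer raised an exception


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def consumer_kpis(records: Sequence[Record]) -> dict:
    """Compute throughput, p95 latency, and exception rate (needs >= 1 record)."""
    window = max(r.finished_at for r in records) - min(r.received_at for r in records)
    latencies = [r.finished_at - r.received_at for r in records]
    return {
        # Counts every handled webhook, failed or not.
        "throughput_per_s": len(records) / window if window > 0 else float("inf"),
        "p95_latency_s": percentile(latencies, 95),
        "exception_rate": sum(r.failed for r in records) / len(records),
    }
```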

Performance measurements should also explicitly include a target webhook volume. This gives us a benchmark for the assumptions we make about the webhook load expected in the production environment.

Measuring the performance of our system helps us understand the system better and provides useful information for tuning the system for higher efficiency.

In the next article in the series, we will take a deep dive into the performance measurements of each component within our webhook infrastructure. We will set performance targets for the system and collect metrics that inform the adjustments needed to get optimal performance from the entire webhook infrastructure.
